As the famed psychologist Abraham Maslow once said, “If all you have is a hammer, everything looks like a nail.”

IT monitoring and observability systems make it easy to create alerts for all kinds of events and conditions. It can be tempting to create alerts for all of them, but doing so can quickly lead to problems.

Choosing and Using Alerts Wisely

Organizations often apply a “more is better” mindset when creating alerts. IT teams should instead take a “small is beautiful” approach: reduce the number of notifications by grouping correlated alerts, and automatically sift through the rest so that only alerts indicating a real problem reach people. Several questions are worth considering when pursuing this strategy.

Above all, organizations want to avoid generating incidents for their own sake. Doing so inevitably leads to “alert fatigue,” in which IT staff close tickets just to clear the backlog. What matters is that tickets get generated, and actions get taken in response, when something meaningful has happened. Anything else wastes time and effort and increases overall service costs (or “ticket-closing costs”). The two examples below show how closing incidents is not always desirable or a good investment of time and effort, and they raise an obvious question: why issue so many incidents in the first place?

Example 1: Exceeding a Meaningless Threshold

In keeping with standard policy, an alert comes up showing one server disk is 90% full. An IT staffer is assigned, logs in, checks the disk space, and manages to recover a mere 2 MB after 30 minutes of investigation and cleanup. She then determines that it's normal and acceptable for this disk to run at 90% capacity, closes the incident, and moves on to her next assignment. Unfortunately, because the alert rule itself hasn't changed, the same incident gets generated and elicits the same response next month, and the month after, and so on: lather, rinse, repeat forever.

A smart, anomaly-based alerting system would alleviate this situation by recognizing 90% capacity as "normal" for this disk and not generating alerts at that baseline. If no such system is deployed, the correct response, after clearing the incident, is to file a change request informing management that the threshold for this disk is set too low. A higher threshold that prompts a meaningful response, such as 95% or 99%, might be appropriate; alternatively, a request for a bigger disk might be warranted. In general, anything IT can do to reduce the number of spurious alerts will help staffers focus on more important ones, improving efficiency and lowering overall IT costs.
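To make the baseline idea concrete, here is a minimal Python sketch of an anomaly-style check, assuming the monitoring tool keeps recent usage samples for each disk. It learns the disk's normal level from the mean and standard deviation of those samples and alerts only on a large jump above that level, plus an absolute ceiling for a genuinely full disk. The function `should_alert` and the constants `Z_LIMIT`, `MIN_STDEV`, and `HARD_CEILING` are hypothetical and their values illustrative; commercial anomaly detection is considerably more sophisticated than a simple z-score.

```python
from statistics import mean, pstdev

# Hypothetical tuning knobs; the values are illustrative, not recommendations.
Z_LIMIT = 3.0        # standard deviations above baseline that count as anomalous
MIN_STDEV = 1.0      # floor (percentage points) so a perfectly flat history isn't hypersensitive
HARD_CEILING = 98.0  # always alert at or above this level, whatever the baseline says


def should_alert(history: list[float], current: float) -> bool:
    """Return True if the `current` disk usage (%) warrants an alert.

    `history` holds recent usage samples for the same disk and is used
    to learn what "normal" looks like for that particular disk.
    """
    if current >= HARD_CEILING:
        return True
    if len(history) < 2:
        # Not enough data to learn a baseline; rely on the hard ceiling only.
        return False
    baseline = mean(history)
    spread = max(pstdev(history), MIN_STDEV)
    # Alert only when the reading sits far above this disk's own normal level.
    return (current - baseline) / spread > Z_LIMIT


if __name__ == "__main__":
    history = [89.5, 90.1, 90.0, 89.8, 90.2]  # a disk that normally sits near 90%
    for reading in (90.3, 94.0, 98.5):
        print(reading, should_alert(history, reading))
```

With a history hovering around 90%, a reading of 90.3% stays quiet while 94% or 98.5% raises an alert, which is the behavior the disk in Example 1 needed.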

Example 2: Lost in Alert Overload

Imagine an organization that has created dozens of alerts for its IT staff to handle. These alerts are poorly prioritized and lack the context IT staffers need to separate hair-on-fire situations from smoldering embers. Without a clear sense of which alerts are most dire, staffers waste too much time fixing things that don’t significantly affect the business. Worse, they sometimes overlook, or never get around to, tickets for problems that cost the organization time, money, and customers. A monitoring and observability system that correlates related alerts from different parts of the environment and supplies context-based information can reduce the number of alerts and keep the focus on what's important.
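Correlation can be as simple as collapsing alerts that share a key and arrive close together in time. The Python sketch below assumes each alert is tagged with the business service it affects (a hypothetical `service` field) and groups alerts for the same service that arrive within a configurable window (five minutes by default, an arbitrary choice). The `Alert` class and `correlate` function are illustrative names; real observability platforms typically correlate using topology and dependency data, so treat this only as an outline of the idea.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    timestamp: float  # seconds since some reference point
    service: str      # hypothetical correlation key: the business service affected
    source: str       # component that raised the alert (database, app, load balancer...)
    message: str


def correlate(alerts: list[Alert], window: float = 300.0) -> list[list[Alert]]:
    """Group alerts for the same service that arrive within `window` seconds
    of the previous alert in that group. Each group becomes one notification."""
    open_groups: dict[str, list[Alert]] = {}  # latest group per service
    groups: list[list[Alert]] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        group = open_groups.get(alert.service)
        if group is not None and alert.timestamp - group[-1].timestamp <= window:
            group.append(alert)  # close enough in time: same incident
        else:
            group = [alert]      # start a new incident for this service
            open_groups[alert.service] = group
            groups.append(group)
    return groups


if __name__ == "__main__":
    alerts = [
        Alert(0, "checkout", "db", "High query latency"),
        Alert(60, "checkout", "app", "HTTP 500 rate up"),
        Alert(90, "checkout", "lb", "Backend health check failing"),
        Alert(120, "email", "smtp", "Queue length high"),
        Alert(1000, "checkout", "app", "HTTP 500 rate up"),
    ]
    for group in correlate(alerts):
        print(f"{group[0].service}: {len(group)} alert(s), first: {group[0].message}")
```

Here five raw alerts become three notifications; the last checkout alert starts a new group because it arrives more than five minutes after the previous one.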

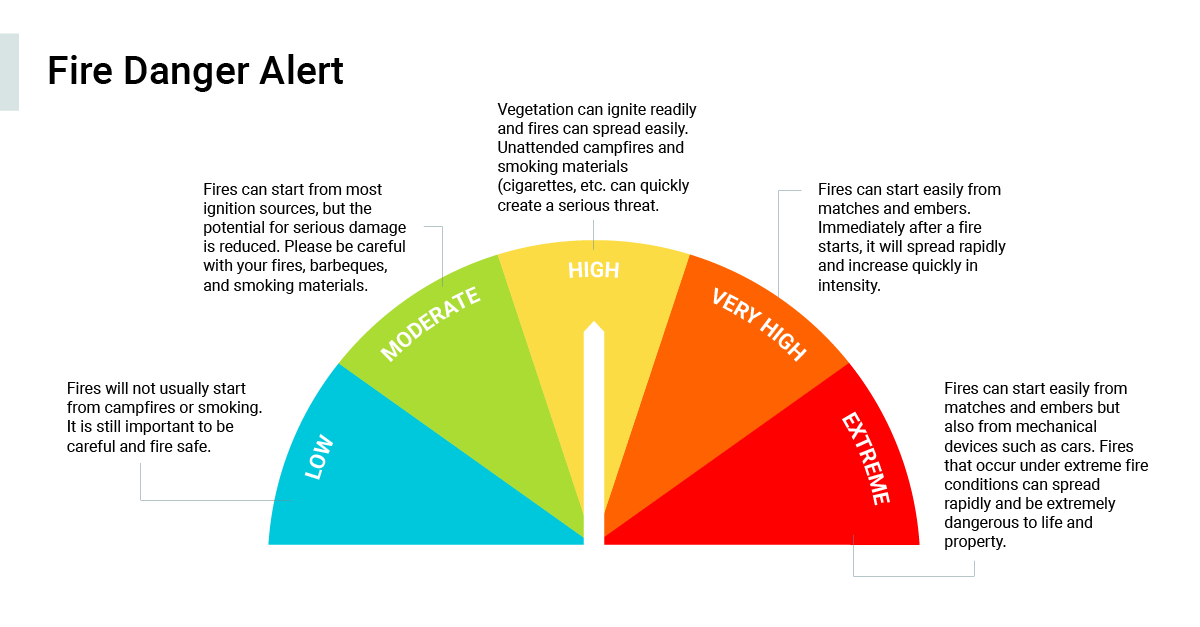

A proper IT operations management (ITOM) environment includes context with alerts so IT staff can understand the reported conditions, events, or issues within the bigger picture of what’s expected of the organization’s systems. This context shows how an alert diverges from the norm and what it could portend. Likewise, it’s essential to impose a classification scheme on alerts to set their priority (e.g., critical, severe, routine, informational), so attention naturally falls where it’s most needed. Figure 1 shows a common classification scheme used for fire danger alerts.
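A severity scheme like this maps naturally onto a priority queue. The Python sketch below uses the four example levels from the paragraph and always hands out the most severe pending alert first, breaking ties by age. The names `Severity` and `AlertQueue` are hypothetical; an ITOM product would also weigh business impact and context, not just a fixed label.

```python
import heapq
from enum import IntEnum


class Severity(IntEnum):
    # Lower value = more urgent. Levels follow the example scheme in the text.
    CRITICAL = 0
    SEVERE = 1
    ROUTINE = 2
    INFORMATIONAL = 3


class AlertQueue:
    """Pending alerts, handed out most-severe first; ties go to the oldest alert."""

    def __init__(self) -> None:
        # Heap entries are (severity, timestamp, message); tuples compare left to right.
        self._heap: list[tuple[int, float, str]] = []

    def push(self, severity: Severity, timestamp: float, message: str) -> None:
        heapq.heappush(self._heap, (int(severity), timestamp, message))

    def pop(self) -> tuple[Severity, str]:
        sev, _, message = heapq.heappop(self._heap)
        return Severity(sev), message

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = AlertQueue()
    queue.push(Severity.ROUTINE, 10, "Disk 90% full")
    queue.push(Severity.CRITICAL, 20, "Checkout service down")
    queue.push(Severity.INFORMATIONAL, 5, "Backup completed")
    queue.push(Severity.CRITICAL, 15, "Payment gateway timeout")
    while queue:
        severity, message = queue.pop()
        print(severity.name, message)
```

The two critical alerts come out first (the older one leading), and the informational backup notice comes out last even though it arrived earliest.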

This is an area where data mining, anomaly detection, and metric correlation all help keep pending alerts in order of priority. These technologies use artificial intelligence (AI) and machine learning (ML) to provide added insight, draw on both prior history and current observations, and help organizations put their efforts into closing the tickets most capable of producing favorable outcomes.
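Metric correlation can be illustrated with a plain statistical stand-in rather than AI/ML: compute the Pearson correlation coefficient between pairs of metric series and treat strongly correlated metrics as likely symptoms of the same underlying issue, so their alerts can be triaged together. The function names, metric names, and the 0.8 threshold below are hypothetical, and correlation alone does not establish which metric is the cause.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length metric series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length, at least 2 samples")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # a perfectly flat series carries no correlation signal
    return cov / sqrt(vx * vy)


def related_metrics(series: dict[str, list[float]],
                    threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return metric pairs whose |r| meets the (hypothetical) threshold."""
    names = sorted(series)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            r = pearson(series[a], series[b])
            if abs(r) >= threshold:
                pairs.append((a, b, round(r, 3)))
    return pairs


if __name__ == "__main__":
    series = {
        "db_latency_ms": [10, 12, 30, 55, 60, 20],
        "http_errors_per_min": [1, 2, 8, 15, 17, 4],
        "cpu_pct_batch_host": [40, 41, 39, 40, 42, 40],
    }
    print(related_metrics(series))
```

In this sample data only the database latency and HTTP error series are flagged as related, so alerts on both could be handled as one incident; the batch host's CPU series, which barely moves, is left out.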

Because some alerts truly threaten business losses or reputational damage, they must take priority over those posing lower risks. This makes constant, ongoing alert classification and prioritization vital to any good ITOM environment. Ultimately, it also lowers the overall cost of providing IT services and support, and the time saved pays off twice by freeing staff to work on things that improve productivity, profitability, and efficiency.

Ensuring the Health of Your IT Infrastructure

Choosing which alerts to raise in an ITOM environment, and how to handle them, is essential for ensuring the health of your IT infrastructure and the productivity and efficiency of your operations. The same applies to how and when pending alerts get classified and prioritized. Getting these choices right yields cost savings and frees up IT resources for more proactive and innovative work, rather than simply reacting to events as they occur.

Researching monitoring and management tools? Take a look at SolarWinds Observability Self-Hosted (formerly known as Hybrid Cloud Observability). It can help you reduce alert fatigue, ensure the most urgent issues get the most immediate attention, and lower the overall cost of IT delivery.