This article was updated on June 19, 2024

Historically, there was a clear delineation between what system administrators (SysAdmins) do and what application developers are responsible for in IT organizations. In recent years—especially in organizations focused on software development—these worlds have come together as IT operations and development teams adopt DevOps practices. The concept of site reliability engineering (SRE) was first introduced by a much-discussed book titled Site Reliability Engineering from Google. The concepts of SRE and what the SRE role does are similar to a SysAdmin but with some additional development skills and experience. Whether they’re ensuring applications and services meet their service-level agreements (SLAs) in the face of hardware outages or are building automation to auto-scale services, SREs treat operations problems as development opportunities in their workflow. This methodology helps improve system reliability and the productivity of end users and development teams. As a system administrator who’s moved into more of an architect role, I've witnessed this culture change firsthand. Good system administrators always had a toolkit of shell scripts they shared and modified to help automate as many operations tasks in their production systems as possible. Over time, however, the frameworks for implementing automation and orchestration tools—like Kubernetes—have led to even more development work as they migrate into SRE roles.What Does an SRE Do?

Since the SRE role combines a traditional SysAdmin or operations engineer role with a developer, the SRE may not write entire applications from scratch. They’re more likely to automate tasks using bash scripts, Python, or any number of other languages. They also work to increase observability across their environment by building observability into their application stack to measure key metrics. As part of the general concepts of site reliability engineering, you’ll need to measure system reliability using these metrics through service-level indicators (SLI) like latency to ensure alignment with your defined service-level objective (SLO). When defining an SLO, you specify key SLIs—such as latency, error rate, and overall throughput—to have a reachable goal. As part of your SLO, you also define a downtime budget, which will help determine the architecture of your application. This downtime budget is a key concept of SRE—all services aren’t expected to have 100% uptime. In fact, dependent services should be sustainable if another service is unavailable—this is an important element of microservices architecture. For example, if your search service is unavailable, the rest of your website or app should still function normally. This downtime, or error budget, also ties into new features the SRE coordinates with the development team. Suppose most of the downtime budget is consumed for a given time frame. In this case, the team may decide to wait to introduce new features (which introduce risk into an otherwise stable environment) until they aren’t worried about breaking the budget.SRE Role Responsibilities

SREs typically spend no more than 50% of their time on operations. In Site Reliability Engineering, several Google engineers mention this number as the key to avoiding toil and frustrating the engineer. The other 50% of their time should be dedicated to project work, including creating new features, improving system scalability, and automating manual tasks like application alerting. If operations are falling out of service, those services should be handled by the development team. Defining ownership of specific tasks can allow SREs to conduct the other facets of their job, like performing post-incident reviews, planning and optimizing on-call rotations, and documenting knowledge in runbooks to be shared with other engineering teams. This practice also helps avoid silos in engineering teams and facilitates more consistent incident response.SRE vs. DevOps

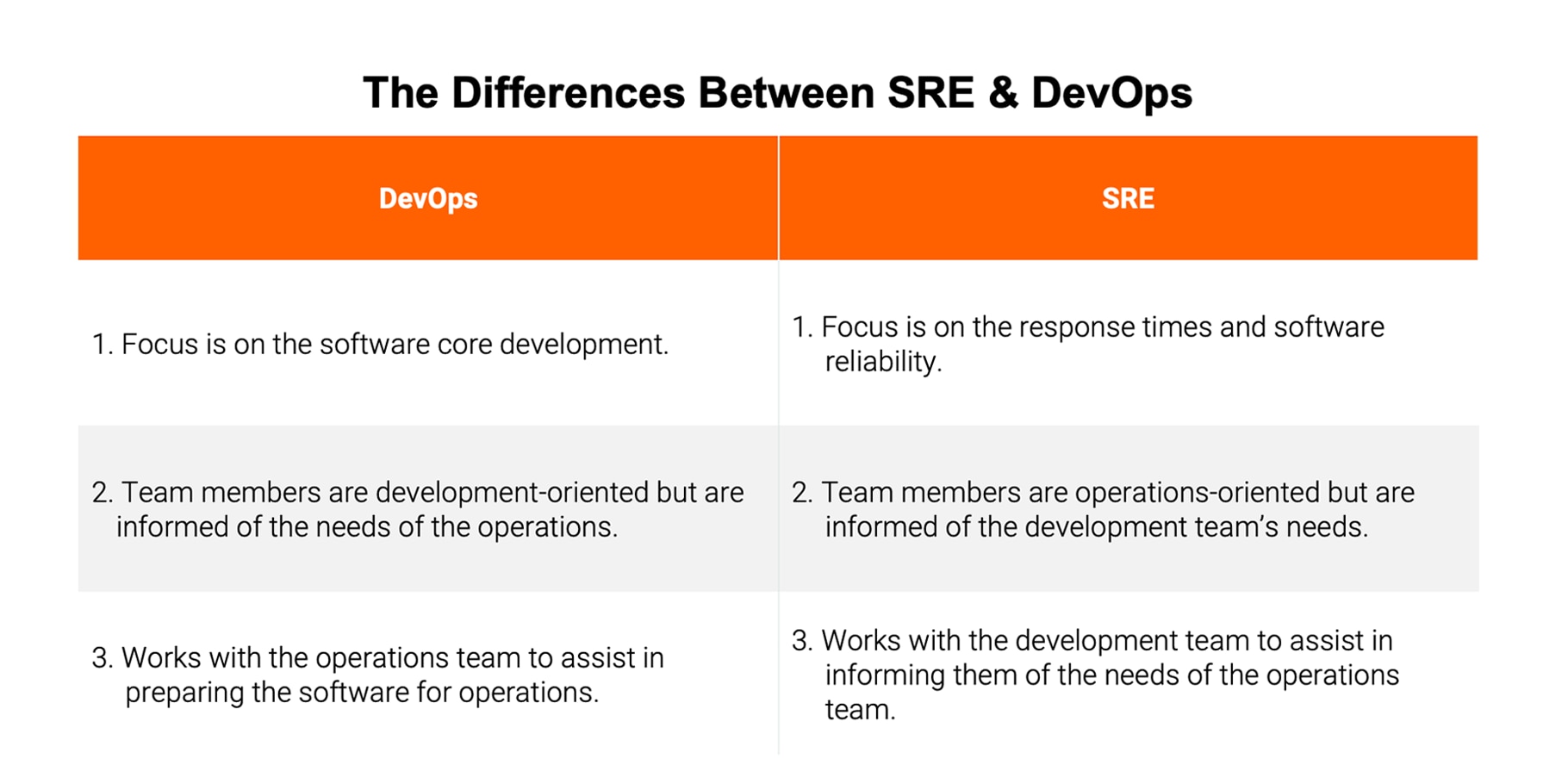

Though the SRE role isn’t purely a development one, SREs still play a key part in DevOps processes and can similarly help organizations realize the benefits of DevOps. In fact, the SRE role itself can be thought of as the physical implementation of DevOps practices. The SRE's role in DevOps is to ensure the apps and services used by the DevOps team are available to end users and applications when needed. Even though there’s a lot of overlap between SRE and DevOps—and the two are often discussed together—they’re two distinct disciplines. DevOps is defined as a set of principles building on the Agile movement and best practices for developing and deploying software. As the name implies, DevOps bridges the gap between the people who write the software applications and the people who keep those applications up and running. DevOps, like SRE, is built on team culture and relationships, which help teams see faster development cycles and far fewer bugs.

SREs help DevOps by sharing their knowledge of software development and managing infrastructure to make recommendations around best practices, and they can also assist directly with code management and monitoring to help improve DevOps applications. SREs can further reduce the communication gap between dev and operations teams, improving the overall infrastructure.

DevOps is defined as a set of principles building on the Agile movement and best practices for developing and deploying software. As the name implies, DevOps bridges the gap between the people who write the software applications and the people who keep those applications up and running. DevOps, like SRE, is built on team culture and relationships, which help teams see faster development cycles and far fewer bugs.

SREs help DevOps by sharing their knowledge of software development and managing infrastructure to make recommendations around best practices, and they can also assist directly with code management and monitoring to help improve DevOps applications. SREs can further reduce the communication gap between dev and operations teams, improving the overall infrastructure.