“How much load can this system sustain?” is a common question in capacity planning. The practical purpose is usually something like the following:

- How soon will the system begin to perform badly as load increases?

- How many servers will I need for the expected holiday load?

- Is this system close to a point of failure?

- Are we over-provisioned? By how much?

Capacity planning is often a difficult problem because it’s hard to tell what a system’s true capacity is. The

Universal Scalability Law can help you estimate this.

Conventional ways to determine system capacity are often difficult, expensive, and don’t give results you can really believe in. For example, you can set up load tests, but it takes a lot of work and time, and the results are suspect because the workload is always artificial in some way.

You can also run benchmarks, but most benchmarks are pretty useless for predicting a system’s usable capacity. In addition to being an artificial workload, they push a system to its maximum throughput and beyond. As I mentioned, it’s rare for benchmarks to be run by people who understand the importance of latency. But when I do see benchmarks that measure latency percentiles, the systems almost always perform very badly at their peak throughput.

Another way I’ve tried to predict system capacity in the past is with queuing theory, using the

Erlang C formula to predict response time at a given utilization. Unfortunately, this requires that you know service times, which are often impossible to obtain. You can measure total response time, but that includes waiting time in the queue, so it’s not the same thing as the service time. The utilization is also often deceptive, because the real utilization of the resources you’re trying to model can be difficult to measure correctly too. Most people I know consider the Erlang approach to be difficult to apply.

If load tests, benchmarks, and queuing theory are difficult to use, can the USL help? Yes, it can. Because the USL is a model, it can help you predict how a system will perform under load beyond what you can observe. The USL’s point of maximum predicts the system’s maximum throughput, so it’s a way to assess a system’s capacity. It can help you get a better idea of how close you are to the system’s maximum capacity.

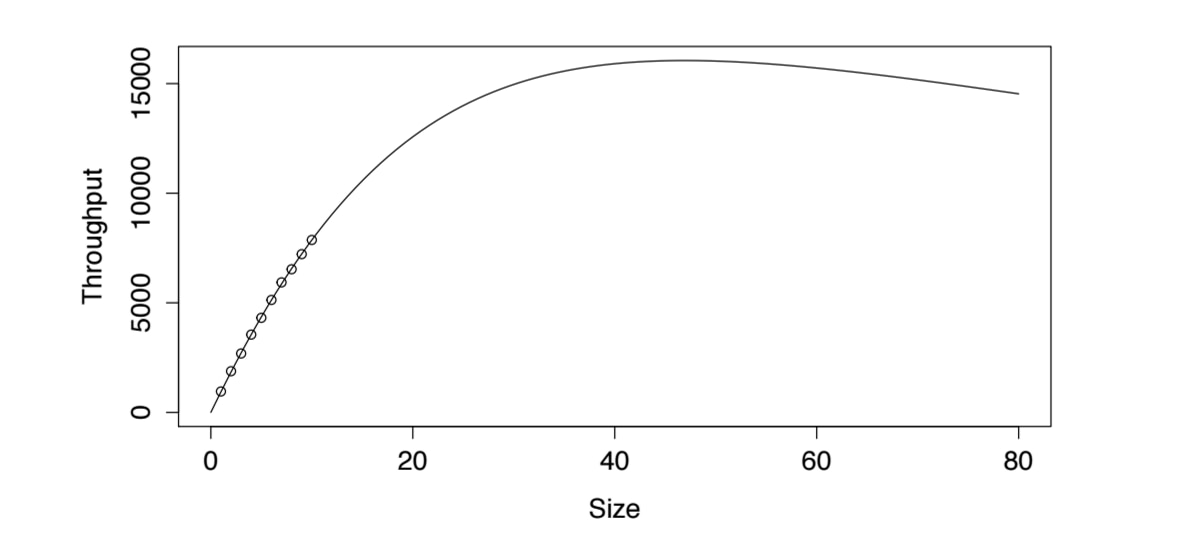

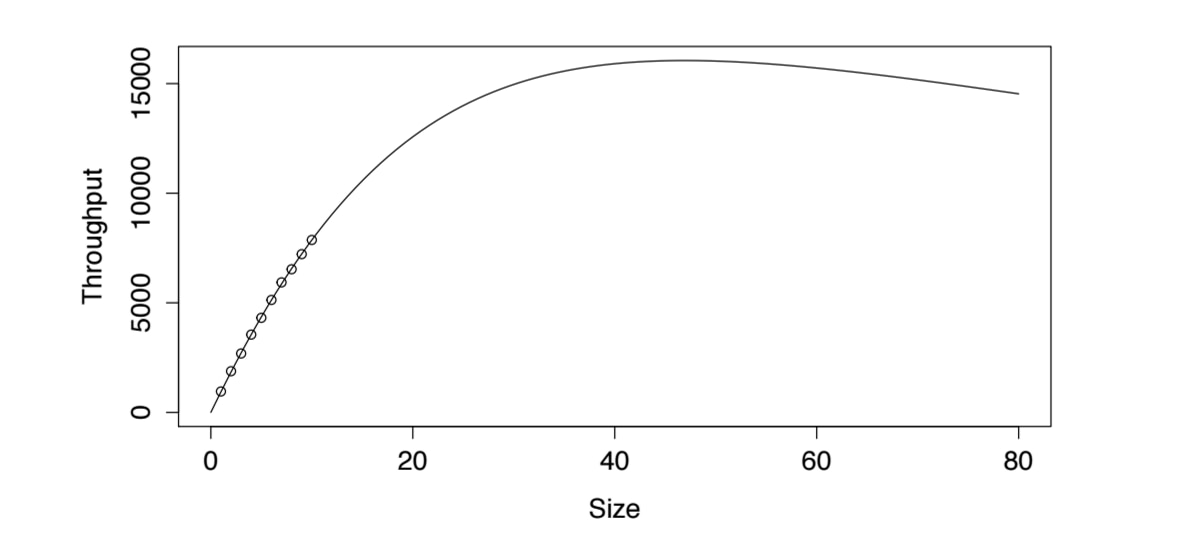

Here’s an example. Imagine that I had measured the first 10 data points in

this benchmark (run on a Cisco server by Vadim Tckachenko), in a live production environment serving real users, not a lab. Here’s the result of fitting the USL to the data:

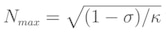

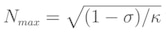

Using this formula (which is used to find the maximum value of a system when the USL function κ coefficient has a nonzero value)

the USL predicts a max of 16,049 queries per second at a concurrency of 46 threads.

I have a rule of thumb for using the USL to project out into the unknown. I’ve seen so many systems that appear to be scaling beautifully—fitting the USL cleanly, just as this one does—and then they hit rough waters, that I don’t trust anything farther out than twice the measured throughput or twice the measured size, whichever comes first.1 And that’s if I’m not seeing telltale signs of leveling off or retrograde throughput. If I see those signs, I lower my expectations accordingly. I will also include other information such as CPU utilization to guide my estimates, if I have it, but in the absence of more data this is a good way to keep expectations capped.

Back to the model: I have measurements only to N = 10, where observed throughput is 7,867, so I’m going to compute the predicted throughput at N = 20. The result is a throughput forecast of 12,572. This is less than twice my maximum observed throughput, so I’ll allow it. In my experience, it’s an optimistic but not unrealistic guess that I won’t get more than about 12,500 queries per second from this server. (As you may remember, this system topped out at 12,211 QPS.)

The outcome is that my system appears to be operating at about half of its maximum capacity. However, as discussed previously, maximum throughput isn’t maximum usable capacity. Again, when the system is at its maximum throughput, response time is probably terrible, and will be extremely inconsistent. That’s why it’s more important to focus on the system’s maximum throughput within the constraints of a service level objective.

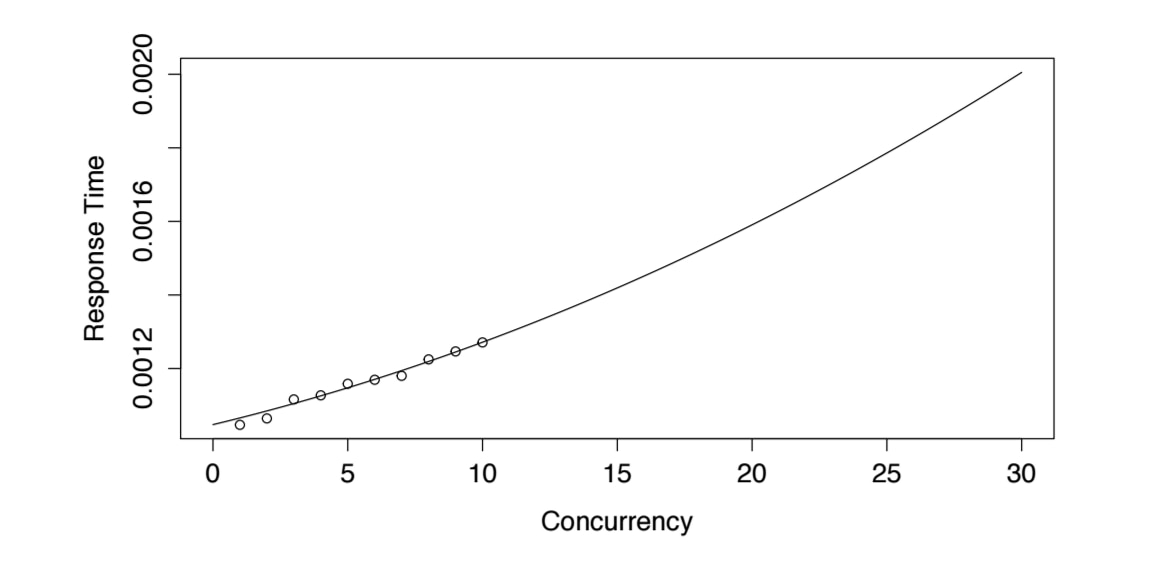

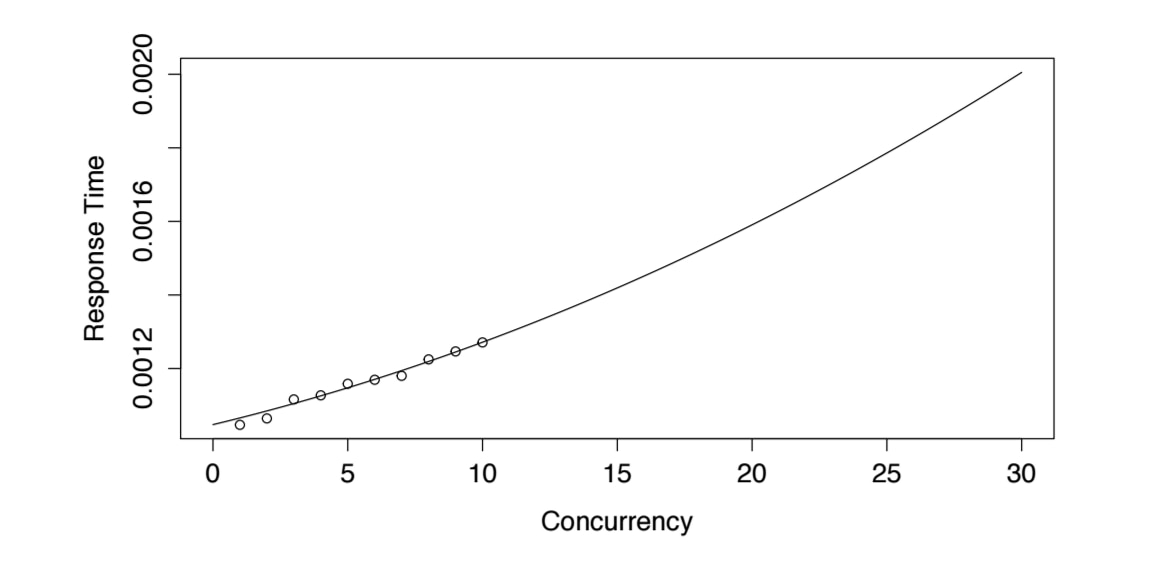

As a first step towards this, I can use average latency to help understand the potential QoS end-users will get from this server. Again using the rearranged form of the USL, I obtain the following response time forecast:

Using the estimated coefficients and the formula for response time from Equation 6, I can predict a mean response time of 0.00159 seconds at 20 threads. Let’s imagine that this is unacceptable; I need mean response times to be 1.5ms or less. Solving the response time equation as a function of latency lets me use it to compute the maximum usable concurrency. The resulting equation has two roots; I’m only interested in the positive one:

Plugging in an R target of 0.0015 yields N = 17, so if I want to avoid violating my SLO I can’t drive my server higher than approximately 11,450 QPS. I’m actually at about two-thirds of my usable capacity, not half. I can grow traffic about 145% before I get into trouble, if I’m lucky.

This process is something like what I might use if I were encountering this server in the wild. It’s not perfect; as Niels Bohr said, “It’s hard to make predictions, especially about the future.” Despite the uncertainty that remains, this approach is much better than staring at a chart and thinking, “I don’t know, it looks like it’s scaling linearly and CPU utilization is only 10%, so I guess we have a lot of headroom?” You usually have less headroom than you think, because of how non-linearly throughput and latency degrade.

The USL has a few nice properties that make it suitable for this type of capacity planning:

- It’s a “black box” technique, which uses data that’s usually easy to get.

- Gathering data and using regression to analyze it is also easy.

- The USL is a relatively simple model, so people like me can understand the math.

- The USL is highly intuitive in comparison to most other approaches.

I would just repeat my caution that a lot of systems perform worse than the USL predicts they will, because their degradation in scalability at larger sizes is more severe than predicted. This is why I suggest viewing the USL’s prediction as optimistic: “I won’t count on being able to scale this system as high as the USL predicts I can.”

You can also combine the USL with selected techniques from queuing theory, such as the Square Root Staffing Rule, to forecast how much capacity is needed and what quality of service it will provide.

Using this formula (which is used to find the maximum value of a system when the USL function κ coefficient has a nonzero value)

Using this formula (which is used to find the maximum value of a system when the USL function κ coefficient has a nonzero value)

the USL predicts a max of 16,049 queries per second at a concurrency of 46 threads.

I have a rule of thumb for using the USL to project out into the unknown. I’ve seen so many systems that appear to be scaling beautifully—fitting the USL cleanly, just as this one does—and then they hit rough waters, that I don’t trust anything farther out than twice the measured throughput or twice the measured size, whichever comes first.1 And that’s if I’m not seeing telltale signs of leveling off or retrograde throughput. If I see those signs, I lower my expectations accordingly. I will also include other information such as CPU utilization to guide my estimates, if I have it, but in the absence of more data this is a good way to keep expectations capped.

Back to the model: I have measurements only to N = 10, where observed throughput is 7,867, so I’m going to compute the predicted throughput at N = 20. The result is a throughput forecast of 12,572. This is less than twice my maximum observed throughput, so I’ll allow it. In my experience, it’s an optimistic but not unrealistic guess that I won’t get more than about 12,500 queries per second from this server. (As you may remember, this system topped out at 12,211 QPS.)

The outcome is that my system appears to be operating at about half of its maximum capacity. However, as discussed previously, maximum throughput isn’t maximum usable capacity. Again, when the system is at its maximum throughput, response time is probably terrible, and will be extremely inconsistent. That’s why it’s more important to focus on the system’s maximum throughput within the constraints of a service level objective.

As a first step towards this, I can use average latency to help understand the potential QoS end-users will get from this server. Again using the rearranged form of the USL, I obtain the following response time forecast:

the USL predicts a max of 16,049 queries per second at a concurrency of 46 threads.

I have a rule of thumb for using the USL to project out into the unknown. I’ve seen so many systems that appear to be scaling beautifully—fitting the USL cleanly, just as this one does—and then they hit rough waters, that I don’t trust anything farther out than twice the measured throughput or twice the measured size, whichever comes first.1 And that’s if I’m not seeing telltale signs of leveling off or retrograde throughput. If I see those signs, I lower my expectations accordingly. I will also include other information such as CPU utilization to guide my estimates, if I have it, but in the absence of more data this is a good way to keep expectations capped.

Back to the model: I have measurements only to N = 10, where observed throughput is 7,867, so I’m going to compute the predicted throughput at N = 20. The result is a throughput forecast of 12,572. This is less than twice my maximum observed throughput, so I’ll allow it. In my experience, it’s an optimistic but not unrealistic guess that I won’t get more than about 12,500 queries per second from this server. (As you may remember, this system topped out at 12,211 QPS.)

The outcome is that my system appears to be operating at about half of its maximum capacity. However, as discussed previously, maximum throughput isn’t maximum usable capacity. Again, when the system is at its maximum throughput, response time is probably terrible, and will be extremely inconsistent. That’s why it’s more important to focus on the system’s maximum throughput within the constraints of a service level objective.

As a first step towards this, I can use average latency to help understand the potential QoS end-users will get from this server. Again using the rearranged form of the USL, I obtain the following response time forecast:

Using the estimated coefficients and the formula for response time from Equation 6, I can predict a mean response time of 0.00159 seconds at 20 threads. Let’s imagine that this is unacceptable; I need mean response times to be 1.5ms or less. Solving the response time equation as a function of latency lets me use it to compute the maximum usable concurrency. The resulting equation has two roots; I’m only interested in the positive one:

Using the estimated coefficients and the formula for response time from Equation 6, I can predict a mean response time of 0.00159 seconds at 20 threads. Let’s imagine that this is unacceptable; I need mean response times to be 1.5ms or less. Solving the response time equation as a function of latency lets me use it to compute the maximum usable concurrency. The resulting equation has two roots; I’m only interested in the positive one:

Plugging in an R target of 0.0015 yields N = 17, so if I want to avoid violating my SLO I can’t drive my server higher than approximately 11,450 QPS. I’m actually at about two-thirds of my usable capacity, not half. I can grow traffic about 145% before I get into trouble, if I’m lucky.

This process is something like what I might use if I were encountering this server in the wild. It’s not perfect; as Niels Bohr said, “It’s hard to make predictions, especially about the future.” Despite the uncertainty that remains, this approach is much better than staring at a chart and thinking, “I don’t know, it looks like it’s scaling linearly and CPU utilization is only 10%, so I guess we have a lot of headroom?” You usually have less headroom than you think, because of how non-linearly throughput and latency degrade.

The USL has a few nice properties that make it suitable for this type of capacity planning:

Plugging in an R target of 0.0015 yields N = 17, so if I want to avoid violating my SLO I can’t drive my server higher than approximately 11,450 QPS. I’m actually at about two-thirds of my usable capacity, not half. I can grow traffic about 145% before I get into trouble, if I’m lucky.

This process is something like what I might use if I were encountering this server in the wild. It’s not perfect; as Niels Bohr said, “It’s hard to make predictions, especially about the future.” Despite the uncertainty that remains, this approach is much better than staring at a chart and thinking, “I don’t know, it looks like it’s scaling linearly and CPU utilization is only 10%, so I guess we have a lot of headroom?” You usually have less headroom than you think, because of how non-linearly throughput and latency degrade.

The USL has a few nice properties that make it suitable for this type of capacity planning: