Containers have been the buzz among developers in recent years with the adoption of cloud-native orchestration tools like Kubernetes and DevOps workflows centered around containers.

At the same time, virtual machines (VMs) still power many enterprise workloads, whether they’re running in a public cloud provider like Azure or an on-premises data center running VMware.

In one of my early jobs, we built a private cloud in 2012, which was a ground-breaking project at the time. One of the significant challenges we ran into was keeping multiple copies of installed operating system images, because those images were so large.

You need to maintain those images (for things like different operating system patches) and move them around to multiple data centers. This consumes both time and network bandwidth. As it turned out, I wasn’t the only architect with those problems.

So, while containers and VMs do many of the same things, they do many things differently, too. Understanding the differences between these virtualization technologies can help you determine which type to use for a given situation.

The Origin of Containers

Containers are not a new concept—isolation was part of the UNIX operating system in the 1970s, and Solaris Containers allowed multiple workloads to share a physical server in the early 2000s.

Containers really took off, however, with the introduction of Docker in 2013. Because of the maturity of virtualization hypervisors like VMware and Hyper-V, it wasn’t a large leap for developers and administrators to understand and, therefore, quickly embrace containerization.

What is the Difference Between a Container and a VM?

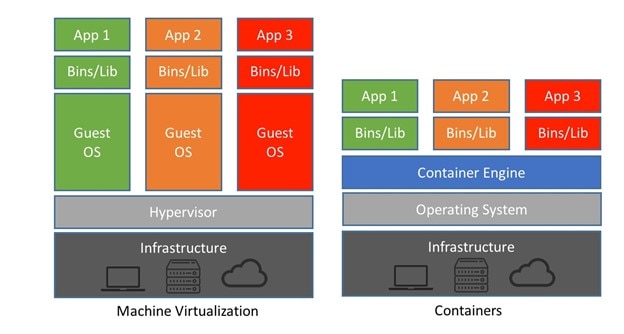

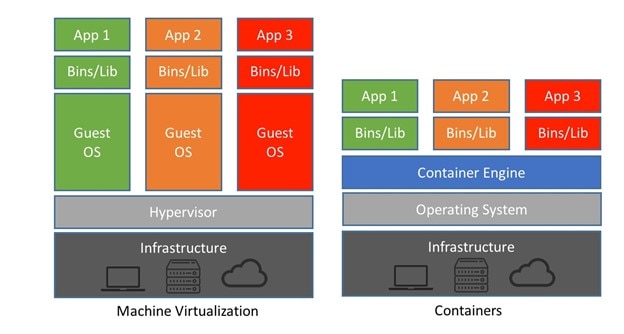

Container technology is similar to a VM, with one significant difference: a VM contains a complete copy of the operating system, abstracted from the underlying hardware, while a container shares an OS kernel with the host operating system (regardless of whether the host is bare metal or virtual).

This means a container image is orders of magnitude smaller than a VM image, increasing its portability. From a developer’s perspective, if the application works on their desktop, it will also work in production because all application dependencies are within the container.

A container has only the binaries and libraries for the app built into it. In the typical case, a running container has a single main process (with a process ID of 1): the application running in the container. The container shares the host operating system’s kernel and hardware resources with any other containers running on the host. See Figure 1.

Figure 1. Architectural differences between virtual machines and containers.
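One way to see the shared kernel and the PID 1 convention for yourself is a small script like the sketch below. The function name `describe_process` is made up for illustration. The assumption is that if this script is the container's main process, it should report a process ID of 1 and the same kernel release as the host, while on a VM or a desktop it will report an ordinary PID and that machine's own kernel.

```python
import os
import platform


def describe_process():
    """Return the PID and kernel release as seen by the current process."""
    return {
        "pid": os.getpid(),                      # 1 when this is the container's main process
        "kernel_release": platform.release(),    # comes from the kernel the process runs on
    }


if __name__ == "__main__":
    info = describe_process()
    print(f"PID: {info['pid']}")
    print(f"Kernel release: {info['kernel_release']}")
```

Running the same script on the host and inside a container on that host, then comparing the kernel release values, is a quick check that the container did not bring its own kernel.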

When To Use Containers vs. VMs

The early use cases for containers were stateless workloads like web servers without requirements for persistent data. This evolved with the introduction of runtimes like Docker and orchestration platforms like Kubernetes. Containers became more robust and could be used for applications that need persistent storage, like Microsoft and Oracle databases.

Today, storage and networking functionality is built into container orchestration platforms like Kubernetes. Kubernetes was created by Google and made into an open-source project.

In much the same way Docker containers exploded in popularity because of ease of use for developers, Kubernetes’ rapid growth is a result of functionality for microservices architecture and the ability to easily deploy and consume containers via cloud providers like AWS and Microsoft Azure.

In the early days of Kubernetes, building a cluster was a complex undertaking. But with the advent of managed Kubernetes services from the cloud providers, building a cluster can be as simple as executing a line of code. Beyond deployment, Kubernetes also manages hardware resources like CPU and memory. It’s fully API-driven, making it easy to develop against.
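To make the API-driven, resource-managing side of Kubernetes more concrete, here is a minimal sketch that builds a Deployment object as plain data and prints it as JSON. The helper name `build_deployment`, the app name `web`, the image tag, and the CPU and memory values are all placeholders chosen for illustration, not recommendations.

```python
import json


def build_deployment(name, image, replicas=2, cpu="250m", memory="256Mi"):
    """Build a Kubernetes Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Requests guide scheduling; limits cap usage.
                            "resources": {
                                "requests": {"cpu": cpu, "memory": memory},
                                "limits": {"cpu": cpu, "memory": memory},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder name and image, used only to show the shape of the output.
    print(json.dumps(build_deployment("web", "nginx:1.25"), indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied to a cluster, though a real deployment would likely need more settings than this sketch includes.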

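Within Kubernetes, you must define a container runtime within the cluster. Historically, this runtime was most commonly Docker, but Kubernetes removed its built-in Docker Engine support (dockershim) in version 1.24, and clusters now typically use runtimes like containerd and CRI-O.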

Another important concept for containers is the container registry. Much like the repositories behind package managers such as apt-get or yum, container registries are libraries of container images. Those container images contain many different applications and are integrated into CI/CD workflows as part of an automated build process. These registries can be private or public, depending on your requirements. The cloud providers also offer the ability to host your own container registry, whether public or private.
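An image name tells you which registry an image comes from, which the following sketch tries to illustrate. It splits a reference into registry, repository, and tag using a simplified version of the convention Docker follows: the first path component is treated as a registry host only if it contains a dot or a colon or is `localhost`, and otherwise Docker Hub (`docker.io`) is assumed. The function name `parse_image_reference` is hypothetical, and digests (`@sha256:...`) are deliberately not handled.

```python
def parse_image_reference(reference, default_registry="docker.io", default_tag="latest"):
    """Split an image reference into registry, repository, and tag (simplified)."""
    first, sep, rest = reference.partition("/")
    # Treat the first component as a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = default_registry, reference

    # A tag is whatever follows a colon in the final path component.
    last_slash = remainder.rfind("/")
    colon = remainder.rfind(":")
    if colon > last_slash:
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, default_tag

    return {"registry": registry, "repository": repository, "tag": tag}


if __name__ == "__main__":
    for ref in ("nginx", "mcr.microsoft.com/mssql/server:2022-latest", "localhost:5000/myapp"):
        print(ref, "->", parse_image_reference(ref))
```

The same image name can therefore point at a public registry, a private one hosted by a cloud provider, or a local one, depending only on the prefix.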

What is the Benefit of Using a VM?

While containers have become massively popular among developers and DevOps teams in recent years, VMs still represent most workloads. Gartner suggests that spend on infrastructure as a service (IaaS) components like VMs makes up a quarter of public cloud spend.

Many legacy applications don’t support running on containers because they were written in monolithic application environments, which depend on multiple processes running for the application to work. This makes them better suited to VMs, which offer a higher level of isolation.

There are also security concerns with containers. There are several known container vulnerabilities, and an exploit in one container can affect other containers on the same host, since isolation isn’t as complete as it is in a VM. If a VM is breached, the scope of the exploit is typically limited to the VM itself.

Is a Container or VM Better?

That said, containers are here, and they aren’t going away. They’ve improved the software development lifecycle by simplifying deployment workflows, reducing maintenance overhead, and offering near-infinite scalability options.

In this respect, they’re like VMs, which fundamentally changed IT, enabling the automation of cloud infrastructure crucial to creating the public cloud and supporting multi-cloud environments. Most organizations have both containers and VMs (in fact, container orchestration platforms like Kubernetes are often hosted on VMs). This makes sense since most environments support a wide variety of applications.

As containers have matured, many organizations have started using them for database servers, like Microsoft SQL Server. Beginning with SQL Server 2017, the popular Microsoft database engine has been available on Docker and Kubernetes. Many organizations use Docker for local database development and Kubernetes for production workloads since it supports high availability and has more robust persistent storage options.
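For local development, starting SQL Server in a container usually comes down to a single `docker run` command. The sketch below only assembles and prints that command rather than running it. The function name `sql_server_run_command`, the container name `sql-dev`, the `2022-latest` image tag, and the password are placeholders chosen for illustration. The `ACCEPT_EULA` and `MSSQL_SA_PASSWORD` environment variables are the ones the official image reads.

```python
import shlex


def sql_server_run_command(
    sa_password,
    host_port=1433,
    image="mcr.microsoft.com/mssql/server:2022-latest",
    name="sql-dev",
):
    """Build the argument list for starting a local SQL Server container."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--env", "ACCEPT_EULA=Y",
        "--env", f"MSSQL_SA_PASSWORD={sa_password}",
        # Map the chosen host port to SQL Server's default port in the container.
        "--publish", f"{host_port}:1433",
        image,
    ]


if __name__ == "__main__":
    # Placeholder password; use a strong secret from a safe source in practice.
    command = sql_server_run_command("example-Passw0rd!")
    print(shlex.join(command))
```

Data written inside a container started this way is lost when the container is removed unless a volume is attached, which is one reason the more robust persistent storage options in Kubernetes matter for production workloads.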

Since databases are at the heart of your applications, their performance is critical. Identifying database performance issues can be challenging for even the best DBAs, which is why so many organizations turn to SolarWinds. You can learn more about how SolarWinds® SQL Sentry can help you more easily manage database containers and keep them running smoothly.

For more resources about monitoring container technology, check out this blog post about monitoring SQL Server on Linux containers or watch this comprehensive guide to SQL Server on Docker containers.