Over the past several years, I've helped numerous customers migrate SQL Server workloads to Azure SQL, including Azure SQL Database, Azure SQL Managed Instance, and Azure SQL Virtual Machines.

In this article, I'll explain some of the challenges of optimizing the compute cost for an Azure SQL Database deployment and review how the serverless compute tier can greatly simplify it.

Azure SQL Database Pricing: DTU vs. vCore

Azure SQL deployments have become very popular due to the security and stability of Azure. With Azure SQL Database, you get to take advantage of the Microsoft Platform as a Service (PaaS) environment with managed backups, a Service Level Agreement (SLA) of 99.99%, Transparent Data Encryption enabled by default, autotuning capabilities, and much more.

Azure SQL Database is built on a database-scoped programming model, which means Azure SQL Database does not support cross-database queries. While this may seem like a limitation, it’s really one of the top features. For applications where you have a single database for the application, this environment works extremely well. Software as a Service (SaaS) organizations benefit tremendously from Azure SQL Database because, by design, one database can't talk to another database. When you need to limit customers to their own database, Azure SQL Database can make it easy.

Azure SQL Database is available in two different purchasing models, with each having various service tiers and pricing models:

- DTU purchasing model: This offers a Basic tier for less demanding workloads, Standard tier for those workloads with typical performance requirements, and the Premium tier for IO-intensive workloads, which is where most business-critical environments end up.

- vCore-based (virtual core) purchasing model: This provides the General Purpose tier for scalable compute and storage options, Hyperscale for on-demand scalable storage, and the Business Critical tier for high transaction rate and high resiliency.

Important Considerations When Optimizing Azure Compute Cost

One of the challenges I've faced when migrating customers from on-premises to Azure SQL Database is configuring the appropriate compute resources considering cost. Azure gives us the ability to scale our compute size up and down with minimal impact to our customers (note how I wrote “minimal impact”). I've scaled databases in the middle of a workday under heavy load and had no impact, and at other times there has been a quick disruption where the customer's application would have to reconnect and then was fine. The time to scale all depends on the size you're scaling up to and if those resources are available on the host your Azure SQL Database is currently on.

A common scenario for customers is when we end up sizing the compute for their peak performance needs. For example, if eight vCores handle their peak workload, we would size for eight vCores, which is what they would be paying for, 24 hours a day, 365 days a year.

Now consider this: how many hours during a typical workday is the client at peak workload? Most likely not all 24 hours. Typical customers would be anywhere from 4-6 hours unless they’re a 24/7 online business servicing clients worldwide. If they’re a typical business serving customers during daytime hours only, they might conclude they're overpaying for compute during non-peak hours. A lot feel this way due to how easy it is to scale up and down. To address this, many will create an automated process to scale their compute level prior to their business day and then scale back down after hours.

Being able to schedule scaling up and down at specific times is a useful optimization to help organizations decrease their overall compute cost for individual databases. However, in many cases I've observed, even when scaling up to a higher number of vCores, the higher utilization would still only be for a portion of the time during which you’re scaled up at the higher tier. Since manually scaling a database has a small chance of causing a disruption, organizations would err on the side of caution and scale before and after normal business hours.

Let's consider an example of a business whose typical busy time is 7 a.m. to 7 p.m. They would scale from two vCores to eight vCores at 7 a.m. and then scale back down to two vCores at 7 p.m. Throughout the day, they would run 40-50% CPU and hit 80% for approximately two hours during their peak time. This was still a much better option for many use cases than paying for eight vCores 24 hours a day and was the only option prior to

Microsoft announcing a serverless tier.

What Is Azure SQL Serverless Compute Tier?

With the serverless tier (only available in the General Purpose vCore model), Azure customers can select the minimum and maximum vCores to use within a selectable scale. Currently, the lowest vCore option is 0.5 vCores, coupled with a max vCores of four. At the other end of the scale, you can have a max of 40 vCores coupled with a minimum of five. The available scale options are:

vCore Min vCore Max Min Mem Max Memory

0.5 4 2 12

0.75 6 2.25 18

1 8 3 24

1.25 10 3.75 30

1.5 12 4.5 36

2 16 6 48

2.25 18 7.5 60

3 24 9 72

4 32 12 96

5 40 15 120

As you can see from the chart above, when the workload scales up to the max number of vCores, the available memory also scales up, allowing for more data to reside in the buffer pool (the in-memory cache of data pages).

On the other hand, memory is reduced as the workload demand decreases and the number of vCores auto-scales down. This will force a cache reduction to evict data pages from memory using the same selection policy as for provisioned compute databases when memory pressure is high. This typically isn't an issue since the event causing the cache reduction is a reduction in overall workload demand.

How to Configure Azure SQL Serverless

To configure your Max vCores and Min vCores, use the slider to make your selection once you select the serverless compute tier in the Azure portal, as shown below:

Of course, you can also use PowerShell, T-SQL through SSMS, and other management APIs instead of the Azure portal.

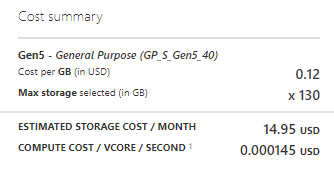

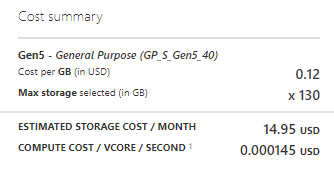

The serverless tier separates compute cost from storage cost and breaks the compute cost down to vCore per second. In the East US, my storage cost is $0.12 per GB and my compute cost is $0.000145 per vCore per second. With a 100GB data maximum size, my transaction log space allocated would be 30GB. The cost summary is below:

In my example, compute cost will be calculated based on my compute usage between my minimum vCores of five and maximum vCores of 40. Having the ability to auto-scale up from five vCores to 40 vCores when the workload calls for it is a huge advantage and can save the organization a great deal of compute cost rather than allocating 40 vCores the entire time. A lot of my customers have databases with random workloads and don't have predictable usage patterns. Some days, the databases may not be used at all, then at other times used heavily, so the serverless tier is ideal for them.

To see metrics on CPU billing, you can go into Metrics and select the metric “App CPU billed.” This should correspond with your compute utilization report for CPU percentage.

When and How to Use the Auto-Pause Option

For databases routinely having no activity, Microsoft now gives us the option to auto-pause the database. When the database is paused, you do not incur any compute cost, but storage cost still applies. Once you enable the auto-pause feature, you must specify the auto-pause delay, meaning how long the database needs to be inactive before it’s paused. You must specify a time between one hour and seven days, as shown below:

The database will automatically resume when database activity recurs. Enabling this feature can lead to huge cost savings for an organization, especially for seldom-used databases.

One challenge with leveraging the auto-pause feature is, on occasion, the first database connection to trigger the database resuming may timeout due to the latency. The second connection happens without any issue. To combat this problem for databases with predictable patterns of usage, customers have set up an automated task to query the database at a specific time of day to ensure the database is ready for use by their end users. For applications built to retry requests before timing out, this slight delay for the database to resume may not be an issue.

Benefits of the Azure Serverless Compute Tier

Microsoft Azure SQL Database is an excellent choice when you need a database per application or database per customer, or if you’re looking for a managed platform to host your databases. Azure SQL Database offers lots of features and functionality along with high SLAs for resiliency. Microsoft provides multiple compute and service tiers to pick from depending on your IO and workload needs, with multiple ways to help their customers save on compute cost by providing the serverless tier.

With the serverless tier, you can auto-scale compute up and down as your workload demands change and pause the database when there is no activity by essentially turning off compute cost. Make sure you carefully consider whether this option can save your organization compute cost compared to a provisioned compute tier.

When it comes to delivering value by reducing costs, regularly evaluating what you

spend in your Azure DevOps estate can be beneficial.

SolarWinds® SQL Sentry® can also help you more easily

monitor Azure SQL database performance by providing a centralized view across your environment, so you can quickly identify cost-effective resource allocation and other optimization opportunities.

To learn more about

what serverless architecture is, read this post from Head Geek™ Chrystal Taylor.

Of course, you can also use PowerShell, T-SQL through SSMS, and other management APIs instead of the Azure portal.

The serverless tier separates compute cost from storage cost and breaks the compute cost down to vCore per second. In the East US, my storage cost is $0.12 per GB and my compute cost is $0.000145 per vCore per second. With a 100GB data maximum size, my transaction log space allocated would be 30GB. The cost summary is below:

Of course, you can also use PowerShell, T-SQL through SSMS, and other management APIs instead of the Azure portal.

The serverless tier separates compute cost from storage cost and breaks the compute cost down to vCore per second. In the East US, my storage cost is $0.12 per GB and my compute cost is $0.000145 per vCore per second. With a 100GB data maximum size, my transaction log space allocated would be 30GB. The cost summary is below:

In my example, compute cost will be calculated based on my compute usage between my minimum vCores of five and maximum vCores of 40. Having the ability to auto-scale up from five vCores to 40 vCores when the workload calls for it is a huge advantage and can save the organization a great deal of compute cost rather than allocating 40 vCores the entire time. A lot of my customers have databases with random workloads and don't have predictable usage patterns. Some days, the databases may not be used at all, then at other times used heavily, so the serverless tier is ideal for them.

To see metrics on CPU billing, you can go into Metrics and select the metric “App CPU billed.” This should correspond with your compute utilization report for CPU percentage.

In my example, compute cost will be calculated based on my compute usage between my minimum vCores of five and maximum vCores of 40. Having the ability to auto-scale up from five vCores to 40 vCores when the workload calls for it is a huge advantage and can save the organization a great deal of compute cost rather than allocating 40 vCores the entire time. A lot of my customers have databases with random workloads and don't have predictable usage patterns. Some days, the databases may not be used at all, then at other times used heavily, so the serverless tier is ideal for them.

To see metrics on CPU billing, you can go into Metrics and select the metric “App CPU billed.” This should correspond with your compute utilization report for CPU percentage.

The database will automatically resume when database activity recurs. Enabling this feature can lead to huge cost savings for an organization, especially for seldom-used databases.

One challenge with leveraging the auto-pause feature is, on occasion, the first database connection to trigger the database resuming may timeout due to the latency. The second connection happens without any issue. To combat this problem for databases with predictable patterns of usage, customers have set up an automated task to query the database at a specific time of day to ensure the database is ready for use by their end users. For applications built to retry requests before timing out, this slight delay for the database to resume may not be an issue.

The database will automatically resume when database activity recurs. Enabling this feature can lead to huge cost savings for an organization, especially for seldom-used databases.

One challenge with leveraging the auto-pause feature is, on occasion, the first database connection to trigger the database resuming may timeout due to the latency. The second connection happens without any issue. To combat this problem for databases with predictable patterns of usage, customers have set up an automated task to query the database at a specific time of day to ensure the database is ready for use by their end users. For applications built to retry requests before timing out, this slight delay for the database to resume may not be an issue.