Representative sampling turns out to be very hard to do well, which is what our newest ebook, Sampling a Stream of Events With a Probabilistic Sketch, is about. While that book explains the overall method for how we achieved representative sampling and good efficiency, this blog post first looks at our foundational requirements. These requirements might not match exactly what you and your organization want, but they’re what we decided SolarWinds DPM needed, and they've proven effective.

Representative Sampling. With some caveats and exceptions we’ll discuss next, we wanted our sample of events to be broadly representative of the original population of events in the stream.

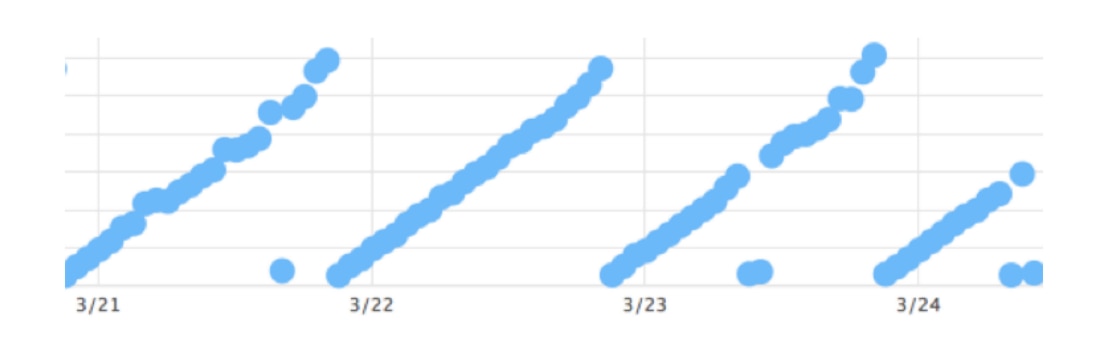

Sampling Rate. We should sample events from each category of events in the stream at a rate that allows a user to drill into smallish time intervals and find samples of interest to examine. If samples are taken too rarely, there won’t be any example queries to study. If too frequently, it will cause undue strain on resources.

Bias Towards Importance. Some events really are more important than others. Requests with unusually high latency, or requests that cause errors, for example, are more important to capture and retain than the general population. This enables troubleshooting of one-in-a-million events that would otherwise be missed. These unusual or unlikely events are disproportionately often the cause of serious problems, so capturing them for inspection is the difference between a dead-end diagnosis effort and a correct solution.
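To make the idea of biasing towards importance concrete, here is a minimal sketch in Python. It is not the method from the ebook; the function names, the 1% base rate, and the 500 ms "slow" threshold are all assumptions chosen for illustration. Errors and slow requests are always kept, and everything else is kept at a low base rate.

```python
import random


def keep_probability(latency_ms, is_error, base_rate=0.01, slow_ms=500.0):
    """Return the chance of keeping one event.

    base_rate and slow_ms are hypothetical tuning values, not figures
    from the original post.
    """
    # Errors and unusually slow requests are always worth keeping.
    if is_error or latency_ms >= slow_ms:
        return 1.0
    # Ordinary events are sampled at the low base rate.
    return base_rate


def should_sample(latency_ms, is_error, rng=random.random):
    """Decide whether to keep an event; rng is injectable for testing."""
    return rng() < keep_probability(latency_ms, is_error)
```

A rare two-second request is kept regardless of the random draw, while a typical fast request is kept only about one time in a hundred under these assumed values.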

Flexible Customization. Our customers require various special-case behaviors for query sampling. These include blacklisting, whitelisting, avoiding the capture of sensitive or private data, and guaranteeing the capture of specific types of samples for debugging or auditing purposes. These features have been the key to solving bizarre and frustrating problems, capturing bugs that no other monitoring systems were able to surface, investigating unauthorized activity, and the like.
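One way to picture these special-case behaviors is as a rule check that runs before any probabilistic sampling. The sketch below is an illustration only: the `Decision` enum, the `decide` function, and the category names are hypothetical and do not describe SolarWinds DPM's actual configuration.

```python
from enum import Enum


class Decision(Enum):
    NEVER = "never"      # blacklisted: do not capture at all
    ALWAYS = "always"    # whitelisted: capture every occurrence
    DEFAULT = "default"  # fall through to the normal sampler


def decide(category, never_capture, always_capture):
    """Apply customer-defined rules to one event category.

    The deny list is checked first so that a category holding sensitive
    data is never captured, even if it also appears on the allow list.
    """
    if category in never_capture:
        return Decision.NEVER
    if category in always_capture:
        return Decision.ALWAYS
    return Decision.DEFAULT


# Hypothetical rule sets for illustration.
never_capture = {"select_card_numbers"}
always_capture = {"drop_table", "select_card_numbers"}
```

Checking the deny list first is a design choice in this sketch: when rules conflict, avoiding the capture of private data wins over guaranteed capture.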

In addition to these user-driven requirements, there were several implementation and technical requirements we needed to satisfy. Some of these are at odds with each other or with user requirements:

Balancing Sampling Rates. It’s hard to balance global and per-category sampling rates across very different types of event streams. For example, some categories of events are high-frequency, but others are very rarely seen. If sampling were strictly representative, we’d capture more samples of the high-frequency streams, which we don’t want because it could starve low-frequency streams, meaning we wouldn’t have any samples from them. And for purposes of limiting the overall rate of sampling across all streams, we need individual streams to be sampled such that we don’t overload anything in the aggregate. But in edge-case scenarios where we have unusually large numbers of categories (many millions for some customers), this becomes difficult to achieve.
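The tension between per-category and global limits can be seen in a naive sampler like the one below. This is a deliberately simple sketch, not the approach the ebook describes; the class name, the one-second window, and the default limits are assumptions. Each category may be sampled at most once per interval, and the total across all categories is capped per one-second window.

```python
class RateLimitedSampler:
    """Naive sampler with a per-category interval and a global cap."""

    def __init__(self, per_category_interval=1.0, global_per_second=100):
        self.per_category_interval = per_category_interval
        self.global_per_second = global_per_second
        self._last_sample = {}     # category -> time of its last sample
        self._window_start = None  # which one-second window we are in
        self._window_count = 0     # samples taken in the current window

    def offer(self, category, now):
        """Return True if the event seen at time `now` (seconds) is sampled."""
        window = int(now)
        if window != self._window_start:
            # A new one-second window begins: reset the global counter.
            self._window_start = window
            self._window_count = 0
        if self._window_count >= self.global_per_second:
            return False  # global budget for this window is exhausted
        last = self._last_sample.get(category)
        if last is not None and now - last < self.per_category_interval:
            return False  # this category was sampled too recently
        self._last_sample[category] = now
        self._window_count += 1
        return True
```

Two weaknesses are visible even in this small version. Rare categories get a sample whenever they appear, but only if busier categories have not already used up the global budget for that window. And the dictionary holds one entry per category, so memory grows with the number of categories, which becomes a problem at the many-millions scale mentioned above.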

Sampling Bias Versus Rate Limits. It’s tricky to balance the bias towards important events with the per-category and global rate limits. If we over-bias towards important events, in some scenarios we can sample so fast that we starve events not flagged as important. The same consideration applies to customer-defined sampling criteria.
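One simple way to keep important events from starving everything else is to split the sampling budget into two reserved shares. The sketch below assumes a fixed budget per time window and a 50/50 split; both the `SplitBudget` class and those numbers are hypothetical, and the post does not say this is how the production system resolves the conflict.

```python
class SplitBudget:
    """Reserve separate sample quotas for important and ordinary events."""

    def __init__(self, total_per_window, important_share=0.5):
        # Hypothetical split: a fixed share of the window's budget goes to
        # important events, and the remainder is reserved for the rest.
        self.important_cap = int(total_per_window * important_share)
        self.regular_cap = total_per_window - self.important_cap
        self.important_used = 0
        self.regular_used = 0

    def admit(self, important):
        """Return True if an event may be sampled within its quota."""
        if important:
            if self.important_used < self.important_cap:
                self.important_used += 1
                return True
            return False
        if self.regular_used < self.regular_cap:
            self.regular_used += 1
            return True
        return False

    def reset(self):
        """Call at the start of each new time window."""
        self.important_used = 0
        self.regular_used = 0
```

With a budget of four and an even split, a burst of important events can take at most two samples in a window, so ordinary events still get their two. The trade-off is that some important events are dropped during a burst, which is exactly the balance the paragraph above describes as tricky.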

Correctness, Efficiency, and Implementation. Balancing correctness and efficiency with ease of implementation and maintenance is hard. Some of the techniques we found or invented were difficult to implement, debug, or maintain. Some were computationally inefficient. Our new ebook presents the results of our third or fourth generation of sampling algorithms.

Taken together, these requirements serve as guidelines and a strong basis for selecting effective samples from an event stream. The information here has been excerpted from a chapter of our newest, free ebook, Sampling a Stream of Events With a Probabilistic Sketch.