Addressing a Problematic MySQL Query and its Effects on Amazon Aurora

May 6, 2016 |

Database

Since we announced our support for Amazon Web Service's Aurora last year, we've had great experiences working with customers who use Aurora as their database system of choice. And not only is Amazon's RDS excellent, so is their team and support.

Recently, we encountered a MySQL bug that was, in turn, having effects on Amazon’s RDS. The MySQL issue involved a problematic query that caused Aurora instances to crash under load; upon discovering this issue, we had the opportunity to work with AWS to identify the error in MySQL and fix it, so that it would avoid an impact on AWS’s systems. It turned out that the query itself posed a potential problem for all monitoring services -- not just SolarWinds® Database Performance Monitor (DPM); fortunately, however, once AWS addresses the bug, the problem will be fixed for all monitoring products that might come across it, in regards to Aurora. Here's some background on the issue and how we addressed it.

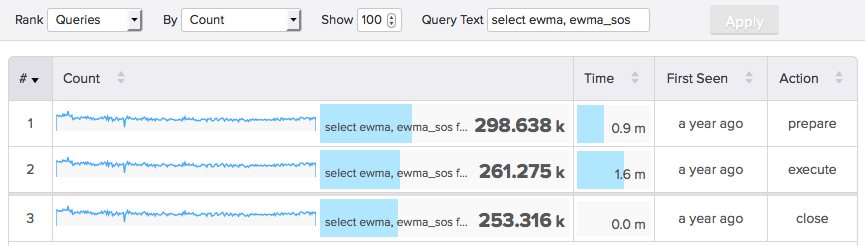

Just as with all database technologies we support, SolarWinds DPM gets metrics about MySQL from many places, including network sniffing and a normal connection to MySQL itself. Sniffing is our preferred method for reading queries, due to the richer information in the protocol. However, we can always resort to PERFORMANCE_SCHEMA for query metrics if sniffing is not an option in a customer's setup. (This is the case for systems using an Amazon RDS instance, for example.) Regardless of query metrics' source, our agents always open a connection to MySQL. There's plenty of information that we can fetch at one-second resolution, including all metrics populating the MySQL and InnoDB charts in the UI.

SHOW PROCESSLIST is among the items we check for some useful bits of data (unlike many monitoring tools, “polling PROCESSLIST” is not the primary way we monitor queries), although we may use a variety of means to get that particular data. There's at least a couple of equivalent alternatives: using tables in PERFORMANCE_SCHEMA or INFORMATION_SCHEMA. Our agent will automatically choose the one with the smallest impact on your server. We’re very careful about how we do this, because we’ve had a lot of experience with monitoring tools that indiscriminately look at such information and cause server performance problems.

While looking at queries from PROCESSLIST, the agent looks for evidence of specific types of troublesome queries, then collects additional metrics from other sources to help diagnose. One of those is metrics on transactions that are blocked by the queries of interest. In other words, we’re not just blindly polling the transaction lock wait table, adding needless overhead -- the PROCESSLIST guides us to the queries of interest in the InnoDB lock wait tables, which is what we really care about in this case.

The query we use to get this is:

SELECT it.trx_mysql_thread_id, count(*)

FROM information_schema.innodb_trx AS it

JOIN information_schema.innodb_lock_waits AS il ON il.blocking_trx_id = it.trx_id

WHERE it.trx_requested_lock_id IS NULL

AND it.trx_started < (now() - INTERVAL 1 SECOND)

AND it.trx_mysql_thread_id IN (...)

GROUP BY it.trx_mysql_thread_id

ORDER BY NULL;

(In case you're curious, the ORDER BY NULL clause asks MySQL not to bother about sorting the result after grouping.)

The values we get from this contribute to the mysql.processlist.query.<id>.lock and mysql.totals.processlist.query.lock metrics.

But it turns out that this query, within MySQL, was crashing Aurora itself. Discovering this behavior and fact was key to establishing an effective way to avoid such problematic crashes: as a workaround, our agents are now set-up to detect Aurora and, if found, to disable this query, to avoid the crash. Otherwise, all query metrics are available as usual.

So, in the end, the query has been documented and we have a straightforward workaround; it's now safe to resume monitoring. Thanks again to AWS for collaborating with us to develop this fix and for providing such a great product.