Today's guest post is brought to you by

Mikhail Panchenko from the Opsmatic team. Thank you for sharing, and we hope others find it helpful!

A Real Life Database Upgrade Story

This post recounts a recent Cassandra 1.2 to 2.0 upgrade performed by the Opsmatic team. It’s a fairly complete narrative of the thought process, research, and preparation that was involved. We hope that it helps others about to embark on the same terrifying journey. I've also included some details about our environment where it was germane to the upgrade.

The story is a boring one with a happy ending, but it’s precisely the sort of boring you WANT when operating a production system. Database upgrades are very high risk changes, as both the upgrade and the rollback often involve modifying data. The only way to have a boring database upgrade is to invest in the preparation.

Some Background

Cassandra is a distributed database which promises, among other things, high availability and data reliability. Over the years, it has gotten quite good at keeping these promises. (Of course, there’s always plenty of

work to do.) The main practical advantages of using such a system are operationally transparent replication and failover. Configure your schemas, set your replication factors, throw your data at it and it’ll take care of redundancy, failover, recovery, etc. I bought into the promise of

Dynamo-like systems early on; I can’t say that I miss configuring binary logs and replication. That’s not to say there aren’t plenty of new knobs to worry about in exchange.

In our our experience with Cassandra 1.2 to this point, it had lived up to much of the hype. We’ve completely lost nodes to the Angry Gods of EC2 and been able to recover with very little operational effort. Of course, I still have plenty of The Fear left from my own days with much earlier versions of Cassandra and RDBMS scaling before that. Databases are not to be trifled with.

Why Upgrade?

We asked ourselves “Is the upgrade risk worth it?” a whole bunch of times over the past year or more, and only recently accumulated a long enough list of benefits to justify the effort. Upgrading a database just for the sake of it is never a good use of time. Be sure the benefits outweigh the risks before going down that path. That we were upgrading to the 2.0 branch a full 13 minor releases in also helped to improve the risk/reward ratio.

Expectations

Best Case Scenario: the upgrade goes exactly as planned; everything is as backwards compatible as it’s supposed to be; we wake up one morning to the smell of a freshly baked pie and a totally stable Cassandra 2.x cluster.

Worst Case Scenario: the upgrade encounters some previously undiscovered bug; all the most critical data gets corrupted on the first node we try to upgrade and then replicated to its peers; we have to restore backups and try to replay traffic to cover the gap; the app is probably down for a significant amount of time; the team becomes irritable and starts to neglect personal hygiene while dealing with the issue, causing everyones’ SOs to leave. (This is what an “exciting” upgrade looks like.)

Realistic Forecast: the upgrade works like it’s supposed to; we mess up some configuration parameters in the cookbook migration, causing sub-optimal performance post-upgrade; we forget to update the configuration of one of our services, causing it to briefly not have a database to talk to; the upgrade process causes some unexpected load for which we don’t have enough resources, causing a trickle of errors during the process.

Fortunately, we were aware of several other teams’ successful upgrades. We could see that many issues related specifically to the upgrade process were resolved in minor releases since 2.0, so we felt pretty good about that forecast.

Preparation

We never do anything in production that we haven’t tried in staging. It’s not always possible to test everything at the same scale in the staging environment, but every process and every compatibility question is verified there first. Furthermore, major cluster operations such as repairs

cannot be performed on a mixed cluster. That means the production upgrade had to be completed on all nodes in <10 total days so as to not miss any scheduled repairs. We wouldn’t start the process until we were reasonably confident it wouldn’t be derailed by something we could have addressed in advance.

Our staging cluster is a much smaller, functionally identical copy of production. So, let’s just follow the upgrade instructions, right? Cool! Well, the meat of the official instructions is:

* stop the node

* backup your configs

* update the package

* configure things to your liking

* start the node

Easy, right?! Here I am, more than a thousand words into a post about a 5 step process! Of course, the reality is that simple only for most trivial installations.

Migrating Chef Cookbooks

Because we use Chef to manage our infrastructure, “update the package” is really “update the cookbook which deploys the package.” Same for the configuration step. On top of potential upgrade issues pertaining to the software itself, we now have to worry about issues with the cookbook versions. Cassandra 2.x introduced some new configuration parameters and retired some old ones, meaning the configuration files our cookbook creates are not 100% compatible.

Our case was complicated further by the fact that we were moving from a home-grown cookbook to a

community one. We were completely on our own for this part of the process. Having our own service handy did help inspire additional confidence. We were able to track the key configuration parameters in

Opsmatic itself and diff the upgraded nodes to the downgraded nodes. Nonetheless, it was a change with a huge surface area, and thus a huge risk.

Client Compatibility: A Detour

Cassandra 2.0 is backwards compatible with the 1.0 protocol (an under-documented fact that we joyfully discovered late in our research, thanks to

this mailing list thread). However, we still wanted to be able to test all our services against the new version incrementally. We could start with the least risky/impactful services and verify that everything was working as expected.

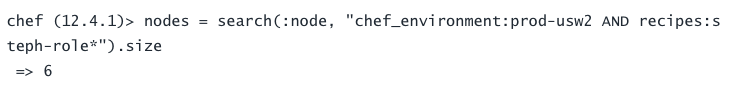

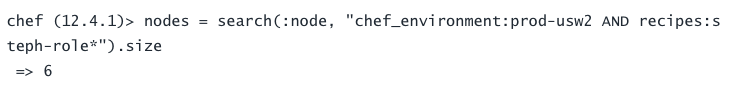

As I've mentioned, we use Chef to configure our services. We use "role cookbooks," which just means that every node gets exactly one item in its Chef "run list" and that item determines what "cluster" it's part of, which other cookbooks should be run there, and what configuration it should have. Nodes can also be looked up by what role they are assigned using a feature called Chef Search.

Our Cassandra 1.2 cluster was called `cassandra-role` - original, I know. The new "role" for Cassandra 2.0 was named `steph-role`. We started giving clusters names independent of the actual service they were running about 6 months ago to accommodate multiple clusters running different versions of the same service. `steph-role` was named after Steph Curry, the basketball player, because he was in the news a lot for being awesome when I was first starting work on the Cassandra 2.0 role.

Previously, we had used simple lists of IP addresses stored in Chef attributes; the cluster grew infrequently, so it was easy enough to add an IP to a bunch of lists, then let Chef push the new lists out to clients. It was definitely technical debt waiting for an opportunity. This upgrade offered just the right excuse.

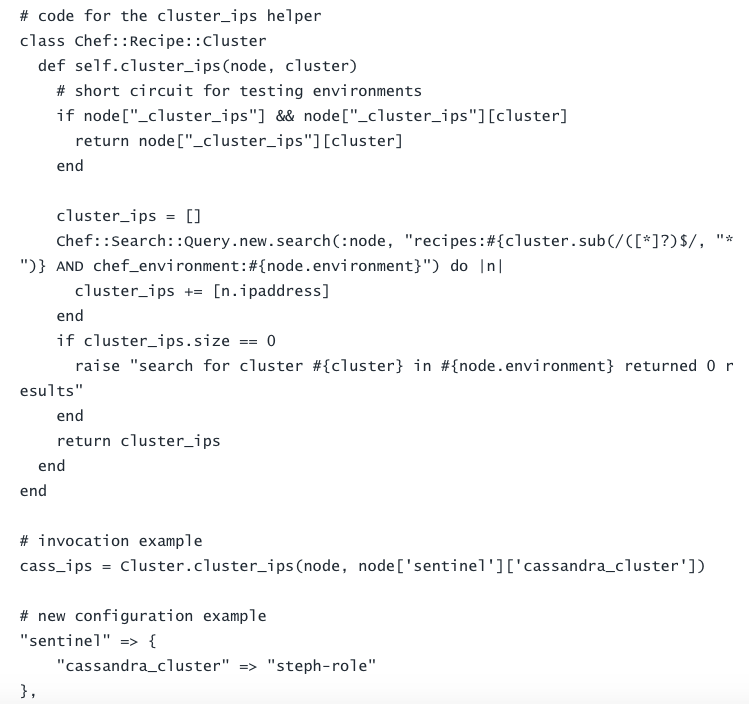

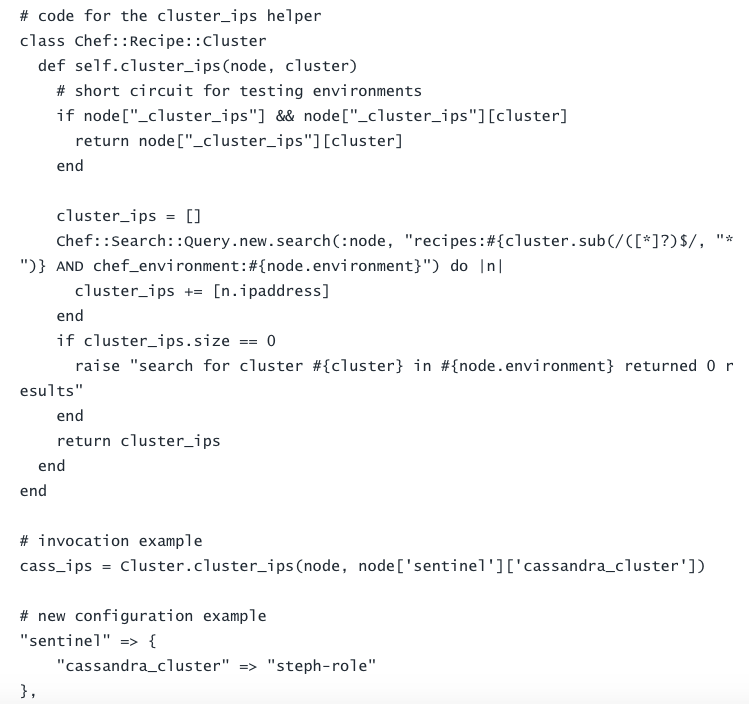

We wrote a simple helper function called `cluster_ips`. It takes two arguments: the environment (e.g. production, staging, etc) and the cluster. It returns the IP addresses of all the nodes returned by the corresponding Chef Search query. We then replaced all the hard-coded IP lists with calls to `cluster_ips`. Instead of a list of IPs, every service's Chef definition would now contain an attribute to specify the name of the Cassandra cluster to look up using `cluster_ips`. Initially, they were all set to `cassandra-role`. We would switch them to `steph-role` one at a time.

During the upgrade, the cluster would straddle two "roles" until everything switched from `cassandra-role` to `steph-role`. We could switch the lookup to `steph-role` for some services early in the upgrade to make sure everything still worked well when communicating with the new version of Cassandra and be able to switch back in a hurry if things went awry.

The Staging Upgrade

With the above changes in place, we were ready to go! We switched one of our staging Cassandra nodes to `steph-role` and ran Chef on it. Clients promptly stopped sending traffic to it because it disappeared from the search result for `cassandra-role`, but it kept serving reads as part of requests coordinated by the 1.2 nodes.

Of course, not everything was so smooth. We had missed an attribute in the new 2.x cookbook causing the location of Cassandra's data directories to be in a different part of the filesystem. The node started up pointing to an empty directory. Since we do reads and writes with `QUORUM` consistency level (2 out of 3 replicas have to acknowledge every operation), this isn't the most disastrous issue - the other replicas will continue to serve correct data and eventually "read repair" the data on the confused node - but it's certainly far from ideal. Furthermore, in our production environment, this would have resulted in data being written to the <10GB root partition of our EC2 instances.

Long story short, our fears related to the cookbook switch were reinforced, so we set about combing over the rest of the config even more carefully to avoid any further “excitement.” The rest of the config looked good, and all our service checks were successful as we switched them over to talk to the upgraded node one at a time. We then upgraded the rest of the staging cluster without incident.

The Production Upgrade - Extra Paranoia

Recall that the thing we feared the most was some sort of "network" effect where the upgraded node caused some unforeseen issue affecting the whole cluster. We felt a lot better about this possibility after successfully completing the staging upgrade, but we still wanted to be extra careful in prod.

The firewall is a very useful tool when working with distributed systems. It can unequivocally cut off communication between nodes in a cluster. This is extremely useful both when testing the failure modes of such a system and when trying to limit the potential impact of a bad cluster member. Since we use EC2, we get to use Security Groups for this purpose, making it very easy to apply and remove rule sets. We have a SG called `prod-cassandra` which opens only Cassandra specific ports to clients and other nodes in the same SG. Removing it from a node effectively cuts it off from the rest of the cluster and from client traffic.

Before upgrading our production node, we did just that.

This allows us to bring the node up cut off from its peers and verify that it is behaving sanely: it thinks it's part of the right cluster, has the correct data, is otherwise properly configured, etc. It's also just reassuring to see a distributed database properly acting out its failure modes. We don't do as many “game days” as we’d like, so this was a nice excuse to see what Cassandra actually did in this situation.

We proceeded to switch the node’s Chef run list from `cassandra-role` to `steph-role`, causing Chef’s next run to upgrade it to Cassandra 2.0. At this point we discovered a critical difference between staging and production that we had previously missed: production hosts store their data files on two volumes. The version of the community cookbook we had been using did not support this, so we had to stop and upgrade to a later release. The cookbook had since changed names, so some dependency wrangling was required. Fortunately, we use

Test Kitchen to test and validate all our roles, so it didn’t take very long.

After that brief exciting detour, the next Chef run did exactly what it was supposed to. The node came online with the memory of a 6 node cluster to which it could no longer communicate, with the correct token ring ownership and the correct amount of data. It was able to respond to queries with consistency level `ONE`.

A step I wish we had taken with 20/20 hindsight was to perform N random queries to random tables after the node had been cut off from its peers, but before it had been upgraded so that we could compare the output before and after. Next time.

After a few more less-than-scientific sanity checks of the newly-upgraded node, we took a deep deep deep deep breath and opened the firewall. The upgraded node found its friends and started serving read traffic. Everything looked splendid!

We did one more node using the same pattern. When we had two fully operational 2.0 nodes (enough to handle part of the client traffic), we switched some of our less critical, lower throughput services to use them as clients. All automated checks and manual QA continued to come back positive. As we continued upgrading nodes, we ended up in a position where only 2 nodes were left on the 1.2 side of the fence, still performing all the client traffic for our busiest services. It was time to cut those over to the 2.0 nodes. This created some very cool “graph art”:

You can see that internally, Cassandra was coordinating the requests evenly around the cluster, but the client traffic was predominantly going to the two pre-upgrade nodes. Something about seeing the actions you’re taking re-told in graphs is magical.

Conclusion

All in all, the upgrade went very well. It took a total of 4 days because we only upgraded nodes during low-usage times. We also made sure that every member of the backend team got to perform the upgrade on at least one of the nodes so that they’d become more familiar with our setup. Scheduling this caused the last couple of nodes to take a bit longer.

We returned only a handful of errors related to the upgrade. This was due to forgetting to run `nodetool drain` on the host being upgraded. The command tells the node to finish serving any outstanding requests, reject any new ones, and flush all in-memory buffers to disk. It’s the correct way to gracefully take a node out of operation. Once we added that to the upgrade checklist, the rest of the nodes restarted cleanly.

The first commits towards a 2.0 cookbook were made in early June. We then pushed the upgrade back until around July 20th, at which point we started actively researching and preparing the process. The step-by-step upgrade plan was started on July 23rd, the bulk of it written that week. The upgrade was completed on July 30th.

The new way to look up Cassandra hosts using Chef Search did contribute to a brief outage a couple of days after the upgrade was complete.. but that’s a story for another time.

Be safe out there!

Our Cassandra 1.2 cluster was called `cassandra-role` - original, I know. The new "role" for Cassandra 2.0 was named `steph-role`. We started giving clusters names independent of the actual service they were running about 6 months ago to accommodate multiple clusters running different versions of the same service. `steph-role` was named after Steph Curry, the basketball player, because he was in the news a lot for being awesome when I was first starting work on the Cassandra 2.0 role.

Previously, we had used simple lists of IP addresses stored in Chef attributes; the cluster grew infrequently, so it was easy enough to add an IP to a bunch of lists, then let Chef push the new lists out to clients. It was definitely technical debt waiting for an opportunity. This upgrade offered just the right excuse.

Our Cassandra 1.2 cluster was called `cassandra-role` - original, I know. The new "role" for Cassandra 2.0 was named `steph-role`. We started giving clusters names independent of the actual service they were running about 6 months ago to accommodate multiple clusters running different versions of the same service. `steph-role` was named after Steph Curry, the basketball player, because he was in the news a lot for being awesome when I was first starting work on the Cassandra 2.0 role.

Previously, we had used simple lists of IP addresses stored in Chef attributes; the cluster grew infrequently, so it was easy enough to add an IP to a bunch of lists, then let Chef push the new lists out to clients. It was definitely technical debt waiting for an opportunity. This upgrade offered just the right excuse.

We wrote a simple helper function called `cluster_ips`. It takes two arguments: the environment (e.g. production, staging, etc) and the cluster. It returns the IP addresses of all the nodes returned by the corresponding Chef Search query. We then replaced all the hard-coded IP lists with calls to `cluster_ips`. Instead of a list of IPs, every service's Chef definition would now contain an attribute to specify the name of the Cassandra cluster to look up using `cluster_ips`. Initially, they were all set to `cassandra-role`. We would switch them to `steph-role` one at a time.

We wrote a simple helper function called `cluster_ips`. It takes two arguments: the environment (e.g. production, staging, etc) and the cluster. It returns the IP addresses of all the nodes returned by the corresponding Chef Search query. We then replaced all the hard-coded IP lists with calls to `cluster_ips`. Instead of a list of IPs, every service's Chef definition would now contain an attribute to specify the name of the Cassandra cluster to look up using `cluster_ips`. Initially, they were all set to `cassandra-role`. We would switch them to `steph-role` one at a time.

During the upgrade, the cluster would straddle two "roles" until everything switched from `cassandra-role` to `steph-role`. We could switch the lookup to `steph-role` for some services early in the upgrade to make sure everything still worked well when communicating with the new version of Cassandra and be able to switch back in a hurry if things went awry.

The Staging Upgrade

With the above changes in place, we were ready to go! We switched one of our staging Cassandra nodes to `steph-role` and ran Chef on it. Clients promptly stopped sending traffic to it because it disappeared from the search result for `cassandra-role`, but it kept serving reads as part of requests coordinated by the 1.2 nodes.

Of course, not everything was so smooth. We had missed an attribute in the new 2.x cookbook causing the location of Cassandra's data directories to be in a different part of the filesystem. The node started up pointing to an empty directory. Since we do reads and writes with `QUORUM` consistency level (2 out of 3 replicas have to acknowledge every operation), this isn't the most disastrous issue - the other replicas will continue to serve correct data and eventually "read repair" the data on the confused node - but it's certainly far from ideal. Furthermore, in our production environment, this would have resulted in data being written to the <10GB root partition of our EC2 instances.

Long story short, our fears related to the cookbook switch were reinforced, so we set about combing over the rest of the config even more carefully to avoid any further “excitement.” The rest of the config looked good, and all our service checks were successful as we switched them over to talk to the upgraded node one at a time. We then upgraded the rest of the staging cluster without incident.

The Production Upgrade - Extra Paranoia

Recall that the thing we feared the most was some sort of "network" effect where the upgraded node caused some unforeseen issue affecting the whole cluster. We felt a lot better about this possibility after successfully completing the staging upgrade, but we still wanted to be extra careful in prod.

The firewall is a very useful tool when working with distributed systems. It can unequivocally cut off communication between nodes in a cluster. This is extremely useful both when testing the failure modes of such a system and when trying to limit the potential impact of a bad cluster member. Since we use EC2, we get to use Security Groups for this purpose, making it very easy to apply and remove rule sets. We have a SG called `prod-cassandra` which opens only Cassandra specific ports to clients and other nodes in the same SG. Removing it from a node effectively cuts it off from the rest of the cluster and from client traffic.

Before upgrading our production node, we did just that.

During the upgrade, the cluster would straddle two "roles" until everything switched from `cassandra-role` to `steph-role`. We could switch the lookup to `steph-role` for some services early in the upgrade to make sure everything still worked well when communicating with the new version of Cassandra and be able to switch back in a hurry if things went awry.

The Staging Upgrade

With the above changes in place, we were ready to go! We switched one of our staging Cassandra nodes to `steph-role` and ran Chef on it. Clients promptly stopped sending traffic to it because it disappeared from the search result for `cassandra-role`, but it kept serving reads as part of requests coordinated by the 1.2 nodes.

Of course, not everything was so smooth. We had missed an attribute in the new 2.x cookbook causing the location of Cassandra's data directories to be in a different part of the filesystem. The node started up pointing to an empty directory. Since we do reads and writes with `QUORUM` consistency level (2 out of 3 replicas have to acknowledge every operation), this isn't the most disastrous issue - the other replicas will continue to serve correct data and eventually "read repair" the data on the confused node - but it's certainly far from ideal. Furthermore, in our production environment, this would have resulted in data being written to the <10GB root partition of our EC2 instances.

Long story short, our fears related to the cookbook switch were reinforced, so we set about combing over the rest of the config even more carefully to avoid any further “excitement.” The rest of the config looked good, and all our service checks were successful as we switched them over to talk to the upgraded node one at a time. We then upgraded the rest of the staging cluster without incident.

The Production Upgrade - Extra Paranoia

Recall that the thing we feared the most was some sort of "network" effect where the upgraded node caused some unforeseen issue affecting the whole cluster. We felt a lot better about this possibility after successfully completing the staging upgrade, but we still wanted to be extra careful in prod.

The firewall is a very useful tool when working with distributed systems. It can unequivocally cut off communication between nodes in a cluster. This is extremely useful both when testing the failure modes of such a system and when trying to limit the potential impact of a bad cluster member. Since we use EC2, we get to use Security Groups for this purpose, making it very easy to apply and remove rule sets. We have a SG called `prod-cassandra` which opens only Cassandra specific ports to clients and other nodes in the same SG. Removing it from a node effectively cuts it off from the rest of the cluster and from client traffic.

Before upgrading our production node, we did just that.

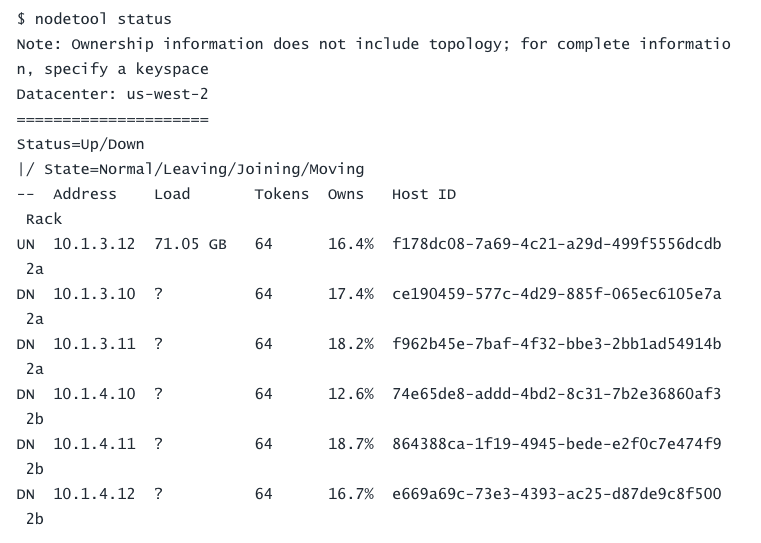

This allows us to bring the node up cut off from its peers and verify that it is behaving sanely: it thinks it's part of the right cluster, has the correct data, is otherwise properly configured, etc. It's also just reassuring to see a distributed database properly acting out its failure modes. We don't do as many “game days” as we’d like, so this was a nice excuse to see what Cassandra actually did in this situation.

We proceeded to switch the node’s Chef run list from `cassandra-role` to `steph-role`, causing Chef’s next run to upgrade it to Cassandra 2.0. At this point we discovered a critical difference between staging and production that we had previously missed: production hosts store their data files on two volumes. The version of the community cookbook we had been using did not support this, so we had to stop and upgrade to a later release. The cookbook had since changed names, so some dependency wrangling was required. Fortunately, we use Test Kitchen to test and validate all our roles, so it didn’t take very long.

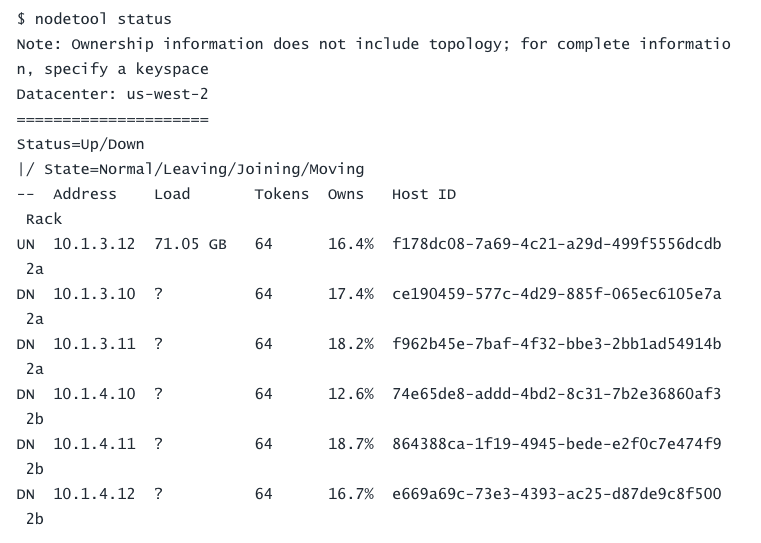

After that brief exciting detour, the next Chef run did exactly what it was supposed to. The node came online with the memory of a 6 node cluster to which it could no longer communicate, with the correct token ring ownership and the correct amount of data. It was able to respond to queries with consistency level `ONE`.

This allows us to bring the node up cut off from its peers and verify that it is behaving sanely: it thinks it's part of the right cluster, has the correct data, is otherwise properly configured, etc. It's also just reassuring to see a distributed database properly acting out its failure modes. We don't do as many “game days” as we’d like, so this was a nice excuse to see what Cassandra actually did in this situation.

We proceeded to switch the node’s Chef run list from `cassandra-role` to `steph-role`, causing Chef’s next run to upgrade it to Cassandra 2.0. At this point we discovered a critical difference between staging and production that we had previously missed: production hosts store their data files on two volumes. The version of the community cookbook we had been using did not support this, so we had to stop and upgrade to a later release. The cookbook had since changed names, so some dependency wrangling was required. Fortunately, we use Test Kitchen to test and validate all our roles, so it didn’t take very long.

After that brief exciting detour, the next Chef run did exactly what it was supposed to. The node came online with the memory of a 6 node cluster to which it could no longer communicate, with the correct token ring ownership and the correct amount of data. It was able to respond to queries with consistency level `ONE`.

A step I wish we had taken with 20/20 hindsight was to perform N random queries to random tables after the node had been cut off from its peers, but before it had been upgraded so that we could compare the output before and after. Next time.

After a few more less-than-scientific sanity checks of the newly-upgraded node, we took a deep deep deep deep breath and opened the firewall. The upgraded node found its friends and started serving read traffic. Everything looked splendid!

We did one more node using the same pattern. When we had two fully operational 2.0 nodes (enough to handle part of the client traffic), we switched some of our less critical, lower throughput services to use them as clients. All automated checks and manual QA continued to come back positive. As we continued upgrading nodes, we ended up in a position where only 2 nodes were left on the 1.2 side of the fence, still performing all the client traffic for our busiest services. It was time to cut those over to the 2.0 nodes. This created some very cool “graph art”:

A step I wish we had taken with 20/20 hindsight was to perform N random queries to random tables after the node had been cut off from its peers, but before it had been upgraded so that we could compare the output before and after. Next time.

After a few more less-than-scientific sanity checks of the newly-upgraded node, we took a deep deep deep deep breath and opened the firewall. The upgraded node found its friends and started serving read traffic. Everything looked splendid!

We did one more node using the same pattern. When we had two fully operational 2.0 nodes (enough to handle part of the client traffic), we switched some of our less critical, lower throughput services to use them as clients. All automated checks and manual QA continued to come back positive. As we continued upgrading nodes, we ended up in a position where only 2 nodes were left on the 1.2 side of the fence, still performing all the client traffic for our busiest services. It was time to cut those over to the 2.0 nodes. This created some very cool “graph art”:

You can see that internally, Cassandra was coordinating the requests evenly around the cluster, but the client traffic was predominantly going to the two pre-upgrade nodes. Something about seeing the actions you’re taking re-told in graphs is magical.

Conclusion

All in all, the upgrade went very well. It took a total of 4 days because we only upgraded nodes during low-usage times. We also made sure that every member of the backend team got to perform the upgrade on at least one of the nodes so that they’d become more familiar with our setup. Scheduling this caused the last couple of nodes to take a bit longer.

We returned only a handful of errors related to the upgrade. This was due to forgetting to run `nodetool drain` on the host being upgraded. The command tells the node to finish serving any outstanding requests, reject any new ones, and flush all in-memory buffers to disk. It’s the correct way to gracefully take a node out of operation. Once we added that to the upgrade checklist, the rest of the nodes restarted cleanly.

The first commits towards a 2.0 cookbook were made in early June. We then pushed the upgrade back until around July 20th, at which point we started actively researching and preparing the process. The step-by-step upgrade plan was started on July 23rd, the bulk of it written that week. The upgrade was completed on July 30th.

The new way to look up Cassandra hosts using Chef Search did contribute to a brief outage a couple of days after the upgrade was complete.. but that’s a story for another time.

Be safe out there!

You can see that internally, Cassandra was coordinating the requests evenly around the cluster, but the client traffic was predominantly going to the two pre-upgrade nodes. Something about seeing the actions you’re taking re-told in graphs is magical.

Conclusion

All in all, the upgrade went very well. It took a total of 4 days because we only upgraded nodes during low-usage times. We also made sure that every member of the backend team got to perform the upgrade on at least one of the nodes so that they’d become more familiar with our setup. Scheduling this caused the last couple of nodes to take a bit longer.

We returned only a handful of errors related to the upgrade. This was due to forgetting to run `nodetool drain` on the host being upgraded. The command tells the node to finish serving any outstanding requests, reject any new ones, and flush all in-memory buffers to disk. It’s the correct way to gracefully take a node out of operation. Once we added that to the upgrade checklist, the rest of the nodes restarted cleanly.

The first commits towards a 2.0 cookbook were made in early June. We then pushed the upgrade back until around July 20th, at which point we started actively researching and preparing the process. The step-by-step upgrade plan was started on July 23rd, the bulk of it written that week. The upgrade was completed on July 30th.

The new way to look up Cassandra hosts using Chef Search did contribute to a brief outage a couple of days after the upgrade was complete.. but that’s a story for another time.

Be safe out there!