We all want our systems to have high availability, but sometimes the exact meaning of “high availability” isn't very clearly defined. However, availability -- like scalability, performance, and so on -- can be expressed as a mathematical function; it can be viewed in quantifiable and digestible terms. In this post, I’ll explain which parameters truly influence availability: an extremely useful concept to understand, as it enables you to focus your efforts in the right places and achieve higher availability instead of just spinning wheels.

What Is Availability?

Availability, simply put, is the absence of downtime. Other, more specialized definitions of availability vary between organizations, but in its purest sense, that’s what it boils down to: the elimination of downtime.

When we speak of “high” availability, we’re usually referring to the percentage of time the resource of interest is available; this percentage usually takes the form of a series of 9’s. For instance, the vaunted “five nines” refers to 99.999% availability, which translates to about five minutes of downtime per year.
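To make the "nines" concrete, here is a minimal sketch that converts a number of nines into allowed downtime per year. The function name is only illustrative, and the calculation assumes a 365-day year (525,600 minutes).

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def downtime_minutes_per_year(nines: int) -> float:
    """Allowed downtime per year for an availability with `nines` nines."""
    unavailability = 10.0 ** -nines  # e.g. 5 nines -> 0.00001
    return MINUTES_PER_YEAR * unavailability


# Print the downtime budget for two through five nines.
for n in range(2, 6):
    print(f"{n} nines: {downtime_minutes_per_year(n):.2f} minutes/year")
```

Each additional nine shrinks the downtime budget by a factor of ten, which is why the last nine is usually the most expensive one.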

Sometimes it’s not enough to measure availability as a binary up-or-down, on-or-off, working-or-not. A lot of availability checks will consider something to be “up” if it’s simply responding to requests, but that’s not useful enough for many (most?) purposes. A more useful definition of downtime also includes slow performance. So a resource is only considered available if it's up and it meets a service level objective, which is best defined as a percentile over intervals of time (e.g. 99.9th percentile response time is less than 5ms in every 5-minute interval).

Combine this service level objective with five nines, and we have a difficult (but desirable) goal to hit: five nines availability means the 99.9th percentile response time must stay below 5ms in all but one 5-minute interval over the course of a year. If you’re willing to pay for it, you can probably achieve such a high standard, but deciding to do so is a business decision, not solely a technical one.
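As a rough illustration of this definition, the sketch below buckets latency samples into 5-minute intervals and reports the fraction of intervals that meet the objective. The function names, the nearest-rank percentile method, and the decision to ignore empty intervals are all assumptions made for the example, not a prescribed method. For scale, a year contains 105,120 five-minute intervals, and five nines leaves room for roughly one of them to miss.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


def slo_availability(samples, interval_s=300, pct=99.9, threshold_ms=5.0):
    """Fraction of observed intervals whose pct-th percentile latency is below the threshold.

    `samples` is an iterable of (unix_timestamp_seconds, latency_ms) pairs.
    Intervals with no samples are ignored here (an assumption); a real
    check would probably want to count them as unavailable.
    """
    buckets = defaultdict(list)
    for ts, latency_ms in samples:
        buckets[int(ts // interval_s)].append(latency_ms)
    if not buckets:
        raise ValueError("no samples")
    good = sum(
        1 for latencies in buckets.values()
        if percentile(latencies, pct) < threshold_ms
    )
    return good / len(buckets)


# Two intervals: the first meets the objective, the second has a 9ms outlier.
samples = [(0, 1.0), (10, 2.0), (300, 1.0), (310, 9.0)]
print(slo_availability(samples))
```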

What Is the Availability Function?

Availability is a combination of how often things are unavailable and how long they remain that way. These two factors are often abbreviated as MTBF (mean time between failures) and MTTR (mean time to recovery).

MTTR and MTBF are the independent variables in the availability function, A:

$$A = \frac{\mathrm{MTBF}}{\mathrm{MTBF} + \mathrm{MTTR}}$$

You can also derive some other functions from this one, including the probability of failure F, which is 1-A; and reliability (R) which is 1/F, or 1/(1-A).

But the availability function A is interesting to examine in more detail.
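Here is a minimal sketch of the function in code, assuming the common steady-state form A = MTBF / (MTBF + MTTR), with both values expressed in the same time unit. The function names are illustrative only.

```python
def availability(mtbf: float, mttr: float) -> float:
    """Steady-state availability; mtbf and mttr must use the same time unit."""
    if mtbf < 0 or mttr < 0 or mtbf + mttr == 0:
        raise ValueError("mtbf and mttr must be non-negative and not both zero")
    return mtbf / (mtbf + mttr)


def failure_probability(mtbf: float, mttr: float) -> float:
    """Probability of failure F, which is 1 - A."""
    return 1.0 - availability(mtbf, mttr)


# Example: one failure every 30 days on average, one hour to recover (units: hours).
a = availability(mtbf=30 * 24, mttr=1)
print(f"A = {a:.6f}, F = {failure_probability(30 * 24, 1):.6f}")
```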

What Matters More: MTTR or MTBF?

If you want to achieve high availability, what should you strive for—short outages, or rare outages? To answer this question, let’s first look at the shape of the availability function.

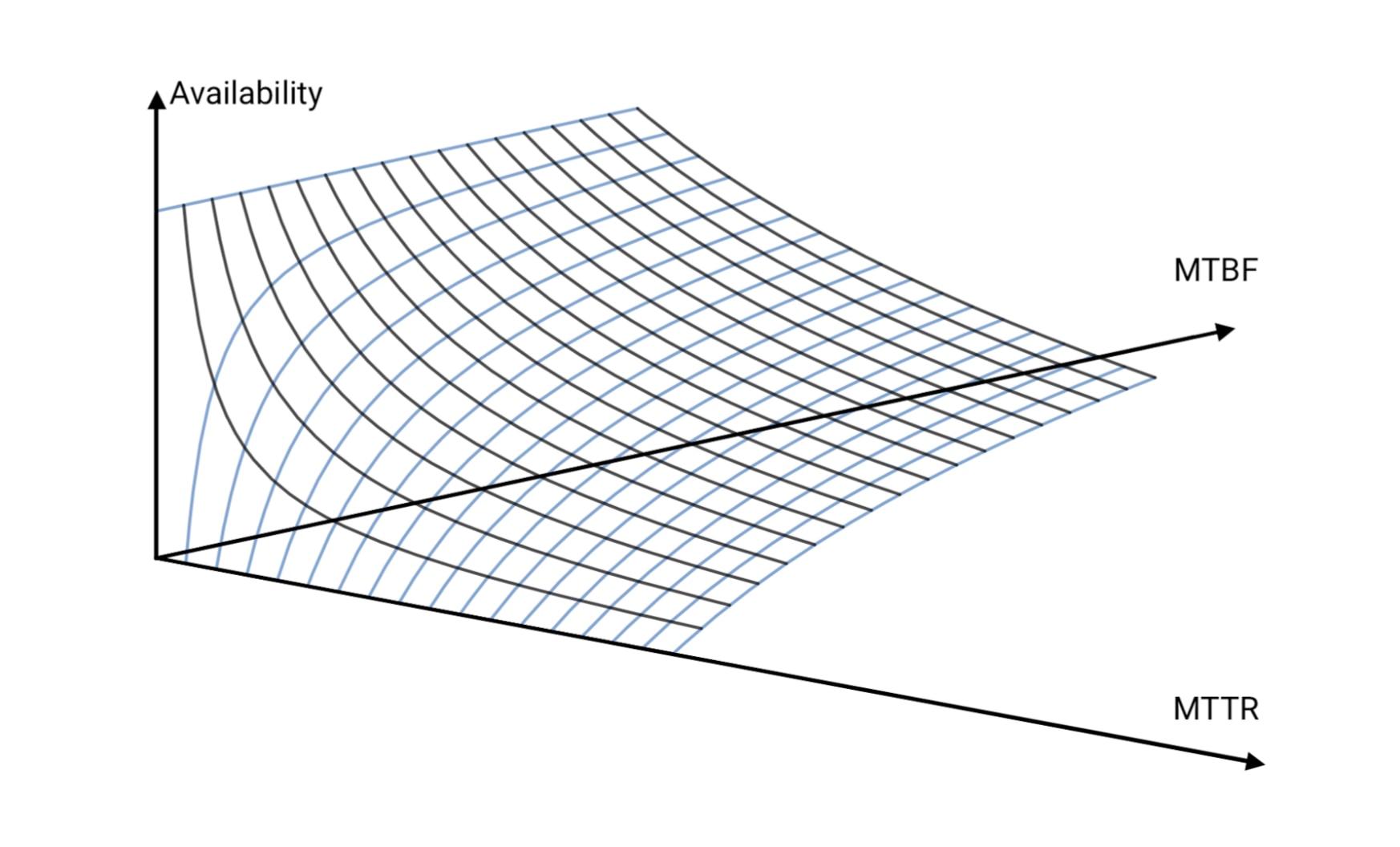

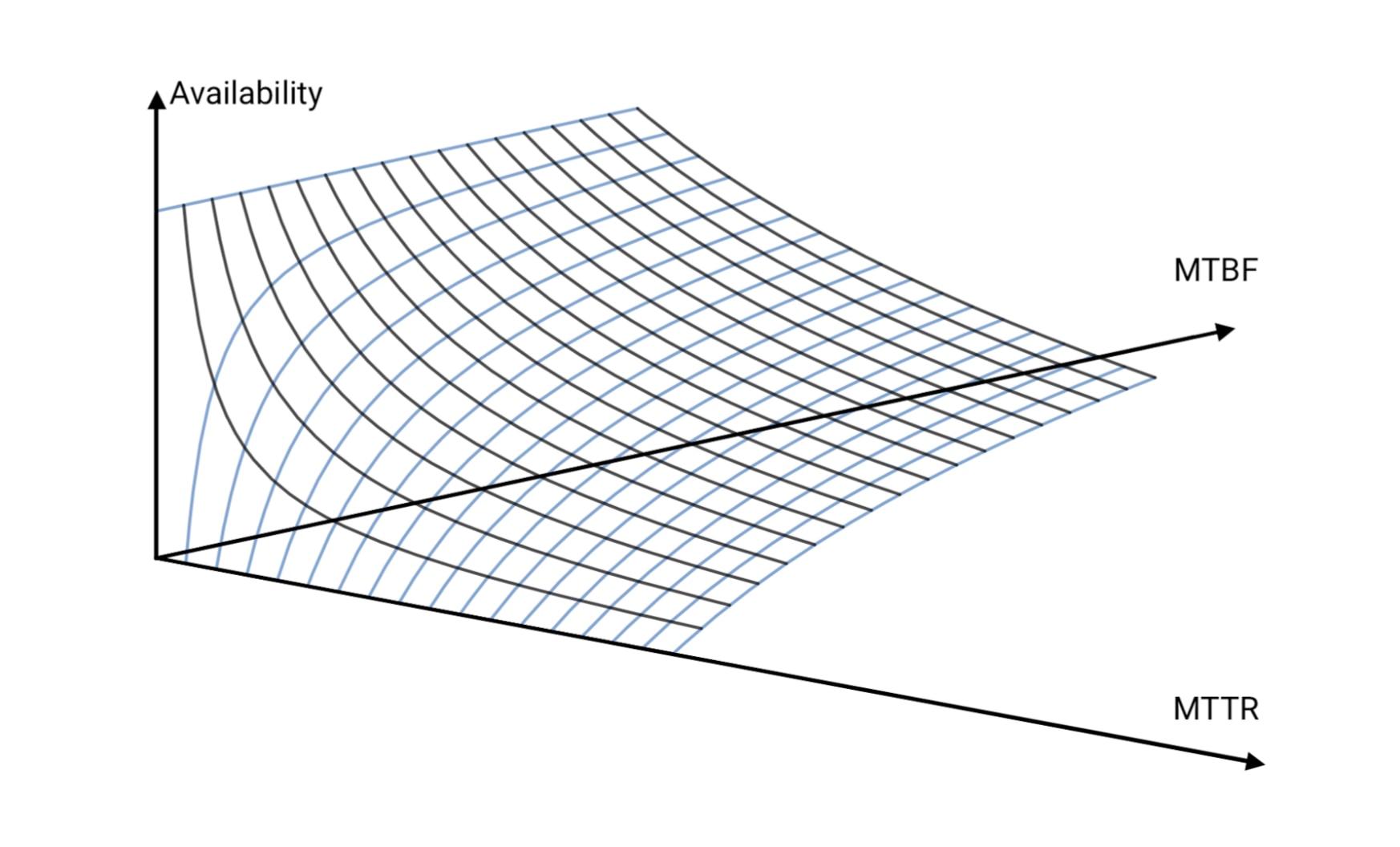

This is a graphical representation of the function, which shows availability is best when MTTR is short and MTBF is long. If you’d like to see an interactive version of this plot, you can find one at Desmos.

It’s obvious from both the chart and the equation that the limit of availability is 1 as MTTR decreases to 0, and as MTBF increases to infinity.
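One way to see this, assuming the common steady-state form of the availability function, is to rewrite it in terms of the ratio of the two variables:

$$A = \frac{\mathrm{MTBF}}{\mathrm{MTBF} + \mathrm{MTTR}} = \frac{1}{1 + \mathrm{MTTR}/\mathrm{MTBF}}$$

$$\lim_{\mathrm{MTTR} \to 0} A = 1, \qquad \lim_{\mathrm{MTBF} \to \infty} A = 1$$

$$\frac{\partial A}{\partial\,\mathrm{MTBF}} = \frac{\mathrm{MTTR}}{(\mathrm{MTBF} + \mathrm{MTTR})^2}, \qquad \frac{\partial A}{\partial\,\mathrm{MTTR}} = -\frac{\mathrm{MTBF}}{(\mathrm{MTBF} + \mathrm{MTTR})^2}$$

Written this way, A depends only on the ratio MTTR/MTBF, so halving MTTR and doubling MTBF yield exactly the same availability. The function alone does not favor one lever over the other.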

I’ve heard it said that a short MTTR is much more important than a long MTBF, but in my opinion that’s not something you can state categorically. Resources don’t exist in isolation. For example, there are lots of cascading effects in most applications. A resource that constantly goes down and recovers quickly might trigger expensive failure handling logic in things that depend on it (e.g. TCP setup, destroying and recreating processes or state).

In addition, depending on what’s within your control (commercial, off-the-shelf software versus something you can change), and what’s expensive or cheap (stateful systems like databases aren’t easy to recover or replace due to the cost of providing them a copy of data to work with), you might find one factor easier to influence than the other.

So what should you focus on—fast recovery, or rare outages? Both? One or the other? It depends!

The Importance of Fast Detection

It’s possible to subdivide MTTR itself into two deeper components: detection time (MTTD, or mean time to detect) and remediation time. MTTR, ultimately, is the sum of the two. So even if remediation time is instantaneous, MTTR will never be less than MTTD, because you can’t fix something if you don’t detect it first.
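The floor that detection time puts under MTTR can be sketched numerically. This example assumes the steady-state form A = MTBF / (MTBF + MTTR) and uses made-up figures (one failure every 30 days, 5 minutes to detect); the function names are hypothetical.

```python
def availability_with_detection(mtbf: float, mttd: float, remediation: float) -> float:
    """Availability when MTTR is split into detection time plus remediation time."""
    mttr = mttd + remediation
    return mtbf / (mtbf + mttr)


def availability_ceiling(mtbf: float, mttd: float) -> float:
    """Best achievable availability: remediation is assumed to be instantaneous."""
    return availability_with_detection(mtbf, mttd, 0.0)


MTBF_MINUTES = 30 * 24 * 60  # illustrative: one failure every 30 days
MTTD_MINUTES = 5             # illustrative: five minutes to notice

print(f"ceiling:             {availability_ceiling(MTBF_MINUTES, MTTD_MINUTES):.6f}")
print(f"with 10-minute fix:  {availability_with_detection(MTBF_MINUTES, MTTD_MINUTES, 10):.6f}")
```

With these figures, even an instantaneous fix leaves availability below four nines, so no amount of remediation work can compensate for slow detection.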

That means it's vitally important to minimize MTTD. This is harder than it might seem. The quicker you are to declare an outage, the more likely you are to raise a false alarm. False alarms are, in turn, a lot more harmful than you might think.
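The tradeoff can be illustrated with a deliberately simple detector that fires only after a number of consecutive failed checks. This is an assumption made for illustration, not a recommended alerting policy, and the function name and sample data are hypothetical.

```python
def first_alert_index(checks, required_failures):
    """Index of the check at which an alert fires, or None if it never fires.

    `checks` is a sequence of booleans, where True means the check passed.
    The alert fires once `required_failures` consecutive checks have failed.
    """
    if required_failures < 1:
        raise ValueError("required_failures must be at least 1")
    streak = 0
    for i, ok in enumerate(checks):
        streak = 0 if ok else streak + 1
        if streak >= required_failures:
            return i
    return None


blip = [True, False, True, True, True, True]        # one transient failure
outage = [True, False, False, False, False, False]  # a sustained failure

for k in (1, 3):
    print(k, first_alert_index(blip, k), first_alert_index(outage, k))
```

Requiring a single failure detects the outage at the first bad check but also alerts on the transient blip. Requiring three suppresses the false alarm at the cost of two extra check intervals added to detection time.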

What can you do? That’s a domain-specific question, but we know a little bit about detecting problems—especially performance problems—in databases:

- Focus on measuring what matters. In the database, this is obviously queries.

- Measure what breaks most. In the database, again, this is queries. Queries are the single most common cause of database performance problems. Not configuration and not externalities, such as hardware or the network.

- Measure in high resolution.

- Capture potential problems early, but alert only on actionable problems. Adaptive fault detection is a specialized form of anomaly detection for query performance issues.

In other types of systems, you’re likely to establish different criteria, but it’s always going to be the case that high monitoring resolution, sophisticated anomaly detection (if it actually works, which is often not the case), and a focus on measuring the system performing its job (and not just measuring what it offers you for measurement) are vital first principles.

Conclusions

In a lot of ways, the pursuit of high availability represents the highest standards of quality and consistency to which you can possibly hold a system. Consider everything that falls within the purview of attaining high availability: recognizing how a database might fail; recognizing what can be monitored to increase detection speed; recovering from hiccups as quickly as possible; avoiding hiccups altogether; ascertaining the percentile level of performance that the system achieves even when it’s not failing.

Talking about availability in reference to a five nines standard -- 99.999% -- is an indication of the extremely high expectations that top notch databases are held to. Again, that’s only five minutes of downtime out of 525,600 minutes in a year. This standard isn’t an utter pipe dream -- it’s within reach, and it’s absolutely worth pursuing. But high availability isn’t achieved through luck, and high database standards in general take a great deal of preparation, insight, and utilization of the correct tools. When these aspects are in place, the overall quality and reliability of your system will naturally follow.
