Sometimes, when working with a top-of-the-line monitoring tool, having the best possible visibility, granularity, and reliability is simply not enough. Sure, those features represent the ultimate goals in monitoring, but, in this case, the ends do not necessarily justify the means. What about costs? As we provide a tool that delivers that ambitious functionality, we also need to pay close attention to any burden that SolarWinds® Database Performance Monitor (DPM) might impose on users’ systems. We need to minimize those costs. And we need to make sure that SolarWinds DPM is as efficient as it is powerful... especially when the onrush of data gets intense.

With those considerations in mind, SolarWinds DPM agents need to be as lightweight as possible. Ideally, we’d eliminate any effect that SolarWinds DPM has on servers. We’ve taken conscious and careful steps toward that objective, reducing SolarWinds DPM’s strain on CPU, memory, and disk I/O; we are also mindful of how much data we send over the network.

But it can be tricky to meet these high standards, just by virtue of what SolarWinds DPM requires to operate (lots of data), the sheer volume of information we’re transmitting, and the frequency of our measurements. Obviously, we need to send data to our datacenter to get metrics. We capture thousands of metrics from dozens or hundreds of servers. This can add up to a lot of bandwidth.

So the more we can reduce the actual amount of data we’re sending, the better it is overall, for both us and our users.

Enter Compression

The vc-aggregator is the agent responsible for sending metrics from our users’ servers directly to SolarWinds DPM. The aggregator receives data from the other agents and buffers metrics, up to a minute at a time. Then, once per minute, we pull the buffer from the prior minute and have it sent to us in segments, at random times. The randomness and segmentation are important: they keep our reception of data even and prevent us from overloading our own systems and forcing ourselves into a DoS (doh!).
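To make the idea of random, segmented delivery concrete, here is a minimal Python sketch. It is not the vc-aggregator's actual code: the function name `schedule_segments`, the segment size, and the 60-second send window are all assumptions made for illustration.

```python
import random

def schedule_segments(points, segment_size, window_seconds=60.0, rng=None):
    """Split one minute's buffered points into segments and give each
    segment a random send delay inside the window (hypothetical helper)."""
    rng = rng or random.Random()
    # Break the buffer into fixed-size chunks; the last one may be shorter.
    segments = [points[i:i + segment_size]
                for i in range(0, len(points), segment_size)]
    # Each segment gets its own independent delay, so many agents
    # reporting at once do not all hit the receiver at the same instant.
    plan = [(rng.uniform(0.0, window_seconds), seg) for seg in segments]
    plan.sort(key=lambda item: item[0])
    return plan

if __name__ == "__main__":
    for delay, segment in schedule_segments(list(range(10)), 3):
        print(f"send {segment} after {delay:.1f}s")
```

Spreading sends across the window like this trades a little latency for a much smoother arrival rate on the receiving side.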

We have a few strategies for keeping payloads small. First of all, we make sure that our data is not defined in overly verbose terms or unnecessary phrasing. We express metrics in JSON, as field names and values. The field names themselves are truncated, ensuring that we don’t waste extra bytes from the outset. We then streamline by opting not to send any value equal to 0, saving space on sparse data.
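A rough sketch of those two tricks, short field names and skipped zeros, might look like the following. The long names `timestamp` and `value` and the one-letter keys `X` and `Y` are stand-ins chosen for illustration, not a description of the real wire format.

```python
import json

# Hypothetical verbose samples: one dict per second, long field names,
# zeros included.
verbose = [{"timestamp": 1500000000 + i, "value": v}
           for i, v in enumerate([3, 0, 5, 0, 0, 2])]

def compact(points):
    """Rename fields to one-letter keys and drop points whose value is 0."""
    return [{"X": p["timestamp"], "Y": p["value"]}
            for p in points if p["value"] != 0]

def size(obj):
    """Number of bytes in the compact JSON encoding of obj."""
    return len(json.dumps(obj, separators=(",", ":")).encode("utf-8"))

small = compact(verbose)
print(size(verbose), "bytes ->", size(small), "bytes")
```

Dropping zeros only works if the receiver treats a missing point as 0, so both sides have to agree on that convention.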

We also save a lot in the way we implement timestamps. Even though we achieve and offer 1-second granularity, we only actually send timestamps once per minute. From there, we locate each point with an offset from that pre-established timestamp, which takes far less space than sending a new, independent timestamp with each and every metric. Under each timestamp there is a list of metrics; each metric has a list (an array) of points; and each point is an offset and a value.
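The nesting described above can be sketched in a few lines of Python. This is an illustration under assumptions, not the agent's real schema: the key names `startts`, `metrics`, `name`, `points`, `X`, and `Y`, and the helper names, are chosen here for the example.

```python
def to_offsets(start_ts, samples):
    """Turn (unix_ts, value) pairs into points relative to start_ts."""
    return [{"X": ts - start_ts, "Y": value} for ts, value in samples]

def from_offsets(start_ts, points):
    """Inverse of to_offsets: recover the absolute timestamps."""
    return [(start_ts + p["X"], p["Y"]) for p in points]

def build_payload(start_ts, series):
    """One timestamp per minute, then metrics, then offset/value points."""
    return {
        "startts": start_ts,
        "metrics": [
            {"name": name, "points": to_offsets(start_ts, samples)}
            for name, samples in series.items()
        ],
    }

if __name__ == "__main__":
    samples = [(1500000000, 4), (1500000001, 7), (1500000059, 1)]
    print(build_payload(1500000000, {"cpu": samples}))
```

Because the offsets within a minute fit in one or two digits, each point carries far fewer characters than a full 10-digit Unix timestamp, and nothing is lost: `from_offsets` recovers the original times.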

These principles mean that our payloads are optimized for both brevity and repetition even before we apply general compression. Once we’ve defined timestamps and offsets, we serialize with JSON and then use gzip. And thanks to compression and our own optimization to take advantage of it, we gain up to an additional 80% in efficiency.
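The serialize-then-gzip step is easy to sketch with Python's standard library. The helper names `encode_payload` and `decode_payload` and the sample payload are hypothetical; only the JSON-then-gzip order comes from the description above.

```python
import gzip
import json

def encode_payload(payload):
    """Serialize to compact JSON, then gzip the resulting bytes."""
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    return gzip.compress(raw)

def decode_payload(blob):
    """Reverse of encode_payload: gunzip, then parse the JSON."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))

if __name__ == "__main__":
    # Made-up payload: one metric with 60 one-second points.
    payload = {"startts": 1500000000,
               "metrics": [{"name": "m",
                            "points": [{"X": i, "Y": 1} for i in range(60)]}]}
    blob = encode_payload(payload)
    print(len(json.dumps(payload)), "bytes of JSON ->", len(blob), "bytes gzipped")
```

Repeated keys and small, similar numbers are exactly what gzip's dictionary matching rewards, which is why shaping the data first pays off twice.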

Turning on the Hose -- Compression in Action

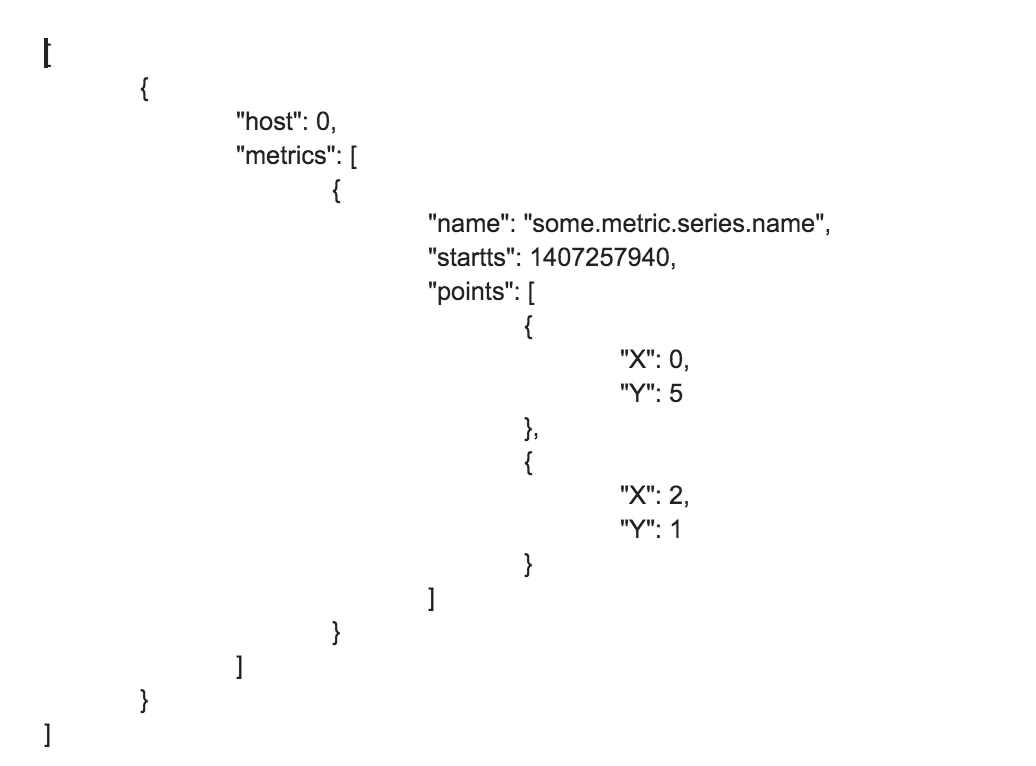

One version of a timeseries payload might look something like this:

This works, but it has some unnecessary verbosity. We're specifying the timestamp for every point, and one of the points has a value of 0, which shouldn't be necessary. We can adjust the payload to make it naturally smaller.

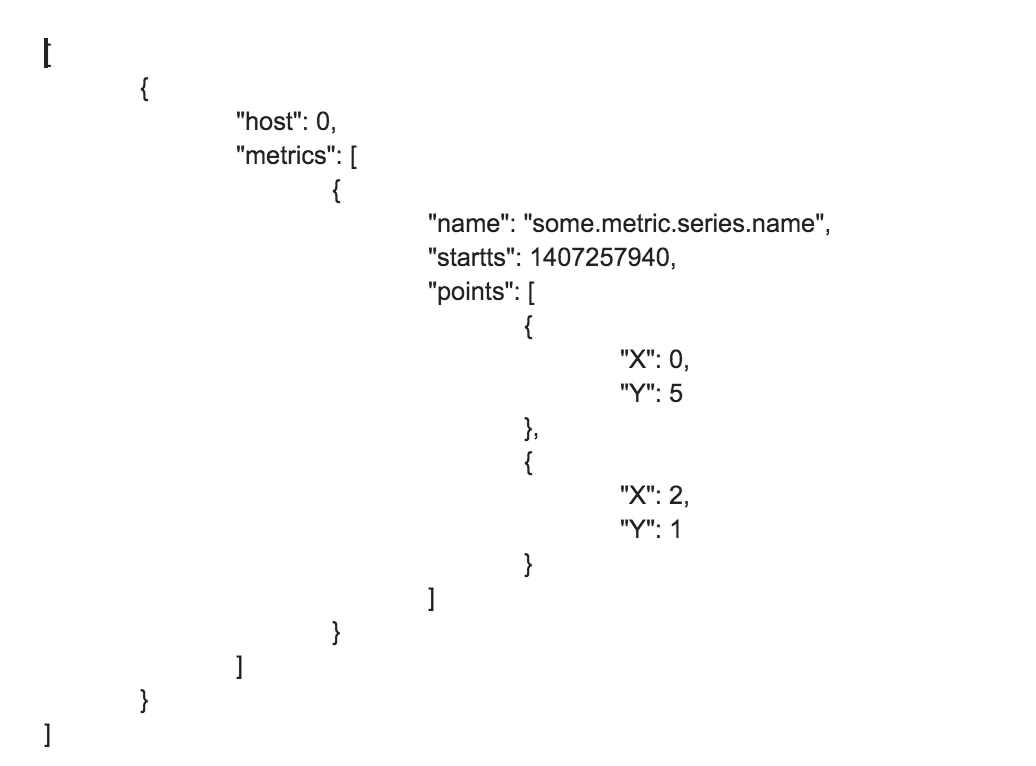

Here's an example of what a series of metrics looks like when it is sent to the API in SolarWinds DPM:

Notice that instead of using a timestamp for each "X" value, we specify a "startts" field, and each point’s "X" represents that point’s offset from the startts. This is less verbose and ultimately saves space. We've also eliminated the 0 value that was in our previous example from the output, so we don't need to fill in blanks. We also compress this payload with gzip before it is sent to our API for maximum savings.

In terms of space, how much do these strategies actually save us?

Let's say we have a payload of 100 different metrics, and approximately 1/6th of the points are 0 values. Using our initial naive structure, this ends up being 147914 bytes, or 144.45KB. If we change to the second format, with "X" values expressed as offsets from an initial value, that will bring us down to 101014 bytes, or 98.65KB. Now let's remove the X/Y pairs for the 0 value points, since they don't provide any useful data. Doing so brings the size to 91514 bytes, or 89.37KB. That's an additional 9.4% improvement... not too bad. The resultant data is highly repetitive and will compress nicely too. In fact, applying gzip compression to the payload brings its size down to 1051 bytes, for a total size improvement of 99.3%!
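For anyone who wants to check the arithmetic, the percentages follow directly from the byte counts quoted above. The helper `pct_saved` is just a convenience defined for this sketch.

```python
# Byte counts quoted in the paragraph above.
naive = 147914      # timestamp on every point, zeros included
offsets = 101014    # "X" expressed as an offset from a start timestamp
no_zeros = 91514    # offsets, with 0-value points removed
gzipped = 1051      # the no_zeros payload after gzip

def pct_saved(before, after):
    """Percentage reduction going from `before` bytes to `after` bytes."""
    return 100.0 * (before - after) / before

print(f"offsets vs naive:  {pct_saved(naive, offsets):.1f}%")
print(f"dropping zeros:    {pct_saved(offsets, no_zeros):.1f}%")
print(f"gzipped vs naive:  {pct_saved(naive, gzipped):.1f}%")
```

Note that the 9.4% is measured against the offset format, while the 99.3% is measured against the original naive payload.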

An astute reader might look at the initial vs. subsequent example and wonder if the changes are really worth the effort. After all, the first one should compress pretty well on its own, shouldn't it? It does, in fact, do exactly that, compressing down to 1387 bytes in size. But is that really a significant difference? Well, relatively speaking, it's 32% larger than the result of our optimized payload (1387 bytes vs. 1051). Admittedly, this is a somewhat contrived example, but in our own infrastructure we've seen about a 20% reduction in the size of traffic received using the second payload instead of the first, so it's definitely a huge help, and it's a cheap change to make.
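A comparison along these lines is easy to run yourself. The sketch below builds both formats from synthetic data and reports raw and gzipped sizes. Everything about the data is an assumption (the value pattern, the metric names, the key names), so the byte counts it prints will not match the figures quoted in this post; it only shows the shape of the experiment.

```python
import gzip
import json

START = 1500000000  # arbitrary start-of-minute timestamp for the synthetic data

def synthetic_values(metric_index):
    """60 one-second values; every sixth one is 0 to mimic sparse data."""
    return [0 if (sec + metric_index) % 6 == 0
            else 1 + (sec * 7 + metric_index) % 50
            for sec in range(60)]

def naive_payload(n_metrics=100):
    """Full timestamp on every point, zeros included."""
    return [{"name": f"metric{m}",
             "points": [{"X": START + sec, "Y": v}
                        for sec, v in enumerate(synthetic_values(m))]}
            for m in range(n_metrics)]

def optimized_payload(n_metrics=100):
    """One start timestamp, per-point offsets, zeros dropped."""
    return {"startts": START,
            "metrics": [{"name": f"metric{m}",
                         "points": [{"X": sec, "Y": v}
                                    for sec, v in enumerate(synthetic_values(m))
                                    if v != 0]}
                        for m in range(n_metrics)]}

def sizes(payload):
    """Return (raw JSON bytes, gzipped bytes) for a payload."""
    raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
    return len(raw), len(gzip.compress(raw))

for label, payload in (("naive", naive_payload()),
                       ("optimized", optimized_payload())):
    raw, zipped = sizes(payload)
    print(f"{label}: {raw} bytes raw, {zipped} bytes gzipped")
```

Real metric values are noisier than this pattern, so the gap between the two formats after compression will depend heavily on your own data.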

When you're consuming many thousands of metrics every second, every bit of savings counts.
